DC

Documentation: https://minipada.github.io/ros2_data_collection

Source code: https://github.com/minipada/ros2_data_collection

For detailed instructions:

- Setup

- Demos

- Concepts

- Data Pipeline

- Measurements

- Conditions

- Data validation

- Groups

- Destinations

- Configuration examples

- Infrastructure setup

- CLI tools

- Requirements

- Future work and Roadmap

- Contributing

- FAQ

- About and contact

Introduction

The DC (Data Collection) project integrates data collection pipelines into ROS 2. Its goal is to connect these pipelines to existing APIs to enable data analytics rather than live monitoring, for which excellent tools already exist. As companies increasingly turn to autonomous robots, the ability to understand and improve operations for any type of machine in any environment has become crucial. These operations are mostly pick-and-drop and inspection tasks. The framework helps collect, validate (through JSON schemas) and reliably send data to build such APIs and dashboards.

DC uses a modular approach based on pluginlib, and its architecture is greatly inspired by Nav2. Pluginlib is used to configure which measurements are collected and where the data goes: measurements and destinations are pluginlib plugins. In addition to pluginlib, most plugins use Fluent Bit in the backend. Fluent Bit is a fast, lightweight and highly scalable logging and metrics processor and forwarder, and a preferred choice for cloud and containerized environments. Developed and interfaced in C, it already provides many features DC can use directly, especially high performance, reliability and data integrity (backpressure handling and data buffering in memory and on the filesystem).

Why collect data from robots?

- Performance Monitoring: Collecting data from a robot allows you to monitor its performance and identify areas for improvement. For example, you can use data to analyze the robot's motion and identify areas where it may be experiencing issues or inefficiencies.

- Fault Diagnosis: Data collection can also be used to diagnose faults and troubleshoot issues with the robot. By collecting data on various aspects of the robot's behavior, you can identify patterns or anomalies that may indicate problems with the system.

- Machine Learning: Data collected from robots can be used to train machine learning models, which can be used to improve the robot's performance and behavior. For example, you can use data collected from sensors to train models for object detection or path planning.

- Research and Development: Data collection is important for research and development in robotics. By collecting data on the behavior of robots in different scenarios, researchers can gain insights into how robots can be designed and optimized for different applications.

- Inventory Management: Data collection can be used to monitor inventory levels and track the movement of goods within a warehouse. This can help managers identify which products are in high demand and optimize the placement of products to improve order fulfillment times.

- Resource Allocation: Data collection can also help managers allocate resources more efficiently. For example, by monitoring the movement of people and goods within a warehouse, managers can identify bottlenecks and areas of congestion and adjust staffing and equipment allocation to address these issues.

- Process Improvement: Data collection can be used to monitor and analyze the performance of various processes within a warehouse. By identifying areas of inefficiency or errors, managers can develop strategies for improving these processes and increasing productivity.

- Predictive Maintenance: Data collection can be used to monitor the performance of equipment and identify potential maintenance issues before they occur. This can help managers schedule maintenance more effectively and avoid costly downtime due to equipment failure.

Main features

- Open source: Currently, none of the tools on the market are open source. This project is released under the MPL-2.0 license; in summary, you can use it without asking permission and without paying

- Modular approach: based on pluginlib and greatly inspired by Nav2 for its architecture

- Reliable data collection: validate and send data to create APIs and dashboards

- Flexible data collection: set polling interval for each measurement or collect every measurement with StringStamped messages

- Customizable validation: validate data using existing or customized JSON schemas

- Easy to extend: add new measurements or destinations by simply adding a plugin

- Flexible data collection conditions: collect data based on conditions such as whether the robot is moving or if a field is equal to a value

- Trigger-based data collection: collect data when all, any, or none of a defined set of conditions are met

- Customizable record collection: configure the number of records to collect at the start and when a condition is activated.

- Data inspection: inspect data from camera input including barcode and QR codes

- Fast and efficient: high performance, using Fluent Bit for backend processing, and designed to minimize code duplication and reduce human errors

- Grouped measurements: measurements can be grouped using the group node based on the ApproximateTimeSynchronizer

- File saving: files can be saved, including map_server maps, camera images, and any file produced by a measurement

- Easy to use: designed to be easy to learn and use

- No C++ 3rd party library required: all 3rd party libraries have a vendor package in the repository

And inherited from Fluent Bit:

Here is an example of a pipeline:

flowchart LR

pl_camera1["Camera bottom"]

pl_camera2["Camera middle"]

pl_camera3["Camera top"]

pl_condition_moving["Moving"]

pl_cpu["CPU"]

pl_cmd_vel["Command velocity"]

pl_memory["Memory"]

pl_position["Position"]

pl_speed["Speed"]

pl_storage["Storage"]

pl_uptime["Uptime"]

pl_network["Network"]

pl_network_boot["Network"]

pl_os_boot["OS"]

subgraph m_n["Measurement node"]

subgraph cond["Condition plugins"]

pl_condition_moving

end

subgraph measurements["Measurement plugins"]

pl_camera1

pl_camera2

pl_camera3

pl_cpu

pl_cmd_vel

pl_memory

pl_position

pl_speed

pl_storage

pl_uptime

pl_network

pl_network_boot

pl_os_boot

end

end

subgraph g_n["Group node"]

gr_boot_system["System (boot)"]

gr_system["System"]

gr_robot["Robot"]

gr_inspection["Inspection"]

end

subgraph d_n["Destination node"]

pl_pgsql["PostgreSQL"]

pl_minio["Minio"]

pl_s3["S3"]

end

pl_camera1 -- if not --> pl_condition_moving --> gr_inspection

pl_camera2 -- if not --> pl_condition_moving --> gr_inspection

pl_camera3 -- if not --> pl_condition_moving --> gr_inspection

pl_cpu --> gr_system

pl_memory --> gr_system

pl_uptime --> gr_boot_system

pl_network_boot --> gr_boot_system

pl_os_boot --> gr_boot_system

pl_storage --> gr_system

pl_cmd_vel --> gr_robot

pl_position --> gr_robot

pl_speed --> gr_robot

pl_network -- Network ping and online status --> pl_pgsql

gr_boot_system -- os, network interfaces\n, permissions and uptime --> pl_pgsql

gr_robot -- Robot cmd_vel, position, speed --> pl_pgsql

gr_system -- Available space,\n memory used and cpu usage --> pl_pgsql

gr_inspection -- Image paths on s3 and minio --> pl_pgsql

gr_inspection -- Raw, rotated and/or inspected images --> pl_minio

gr_inspection -- Raw, rotated and/or inspected images --> pl_s3

License

This program is under the terms of the Mozilla Public License Version 2.0.

About and Contact

For any inquiry, please contact David (d.bensoussan@proton.me). If your inquiry relates to bugs or open-source feature requests, consider posting a ticket on our GitHub project. If your inquiry relates to configuration support or private feature development, reach out and we will be able to support you in your projects.

Setup

Use Docker

Available images

Docker images with latest code are available on the Docker public registry:

| Image | Description |
|---|---|
| minipada/ros2_data_collection:humble-ci | ROS base image augmented with all DC dependencies to use for CI |
| minipada/ros2_data_collection:humble-ci-testing | CI image using the ROS testing repository |
| minipada/ros2_data_collection:humble-source | DC source compiled |
| minipada/ros2_data_collection:humble-source-sim | DC source compiled with all simulation packages |
| minipada/ros2_data_collection:humble-doc | Documentation |

Get any by running:

docker pull minipada/ros2_data_collection:<TAG>

Docker workflow

When developing, use the source-sim image with a docker compose file. The latest one is available on the repository:

version: "3.9"
services:
  ros2-data-collection:
    image: minipada/ros2_data_collection:humble-source-sim
    privileged: true
    container_name: ros2-data-collection
    environment:
      - QT_X11_NO_MITSHM=1
      - NVIDIA_VISIBLE_DEVICES=all
      - NVIDIA_DRIVER_CAPABILITIES=compute,utility,display
      - DISPLAY=unix$DISPLAY
      - XAUTHORITY=$XAUTHORITY
    volumes:
      - ${HOME}/.Xauthority:/root/.Xauthority
      - /tmp/.X11-unix:/tmp/.X11-unix
      - /var/run/docker.sock:/var/run/docker.sock
      - ${PWD}:/root/ws/src/ros2_data_collection
    network_mode: "host"
    restart: "unless-stopped"
    stop_grace_period: "3s"
    runtime: nvidia
    command: tail -F anything
    working_dir: /root/ws

Then, to allow GUI (RViz, Gazebo) to work in the container, execute:

xhost +

Then start the container:

docker compose up -d

And get in the container with:

docker exec -it ros2-data-collection /ros_entrypoint.sh bash

Build from source

Download the repository

Since no apt packages are available yet, you will need to build from source.

Download the repository in your workspace

git clone https://github.com/minipada/ros2_data_collection.git

Install dependencies

Install system and C/C++ dependencies

rosdep install --from-paths src --ignore-src -r -y

Some dependencies are external C++ packages, but a vendor package for each is included in the repository, so colcon will handle them. In addition, since this set of packages has no external Python dependencies, you won't need anything else.

Install python dependencies

This project uses Poetry to manage Python dependencies. It is easy to use, lets you pin each package version as in a requirements.txt, and can manage multiple Python environments. If you have Poetry on your machine, you can execute:

poetry install

If not, you can install the Python dependencies from the provided requirements.txt:

pip3 install -r requirements.txt

Build

colcon build

Run

source install/setup.bash

ros2 launch dc_bringup bringup.launch.py

Issues

If you run into any issues when building ROS 2 Data Collection, you can use the search tool in the issues tab on GitHub and always feel free to open a ticket.

Demos

We will go through some demos together to get started with DC. You will find them in the dc_demos package.

Make sure you have built and sourced the workspace.

Have you checked the configuration examples before running the demos? They will help you understand how the demo configuration works.

| Title | Description |
|---|---|
| Uptime | Collect how long the system has been running and print it on Stdout. Minimal example |
| Group memory and uptime | Collect both memory and uptime and group them in a dictionary |
| Turtlebot3 Stdout | Collect command velocity, map, position and speed and print it in stdout |
| Turtlebot3 AWS Warehouse MinIO PostgreSQL | Collect system, robot, environment and infrastructure data and send it to MinIO and PostgreSQL |
| Turtlebot3 AWS Warehouse InfluxDB | Collect system, robot, environment and infrastructure data and send it to InfluxDB |
| Turtlebot3 QR codes | Collect QR codes and images |
| Custom plugin | Create an external plugin |

Note that each demo assumes you are familiar with the concepts explained in the previous demos.

Uptime to stdout

This is the most minimal example to run DC. It collects the system uptime every 5 seconds and sends it to Stdout.

Let's run it:

ros2 launch dc_demos uptime_stdout.launch.py

At the end, the data is displayed:

[component_container_isolated-1] [{"date":1677668906.745817,"time":92395,"id":"be781e5ffb1e7ee4f817fe7b63e92c32","robot_name":"C3PO","run_id":"218"}]

[component_container_isolated-1] [{"date":1677668911.700309,"time":92400,"id":"be781e5ffb1e7ee4f817fe7b63e92c32","robot_name":"C3PO","run_id":"218"}]

[component_container_isolated-1] [{"date":1677668916.70031,"time":92405,"id":"be781e5ffb1e7ee4f817fe7b63e92c32","robot_name":"C3PO","run_id":"218"}]

[component_container_isolated-1] [{"date":1677668921.700388,"time":92410,"id":"be781e5ffb1e7ee4f817fe7b63e92c32","robot_name":"C3PO","run_id":"218"}]

[component_container_isolated-1] [{"date":1677668926.700422,"time":92415,"id":"be781e5ffb1e7ee4f817fe7b63e92c32","robot_name":"C3PO","run_id":"218"}]
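To post-process this console output, each line can be split into the container prefix and the JSON payload that follows it. The snippet below is a minimal sketch, assuming the line format printed above stays stable; `parse_record_line` is a hypothetical helper and not part of DC.

```python
import json

# Prefix added by the component container to every console line.
PREFIX = "[component_container_isolated-1] "


def parse_record_line(line: str) -> list:
    """Strip the container prefix and decode the JSON array that follows."""
    payload = line.strip()
    if payload.startswith(PREFIX):
        payload = payload[len(PREFIX):]
    return json.loads(payload)


# Two lines copied from the demo output.
lines = [
    '[component_container_isolated-1] [{"date":1677668906.745817,"time":92395,'
    '"id":"be781e5ffb1e7ee4f817fe7b63e92c32","robot_name":"C3PO","run_id":"218"}]',
    '[component_container_isolated-1] [{"date":1677668911.700309,"time":92400,'
    '"id":"be781e5ffb1e7ee4f817fe7b63e92c32","robot_name":"C3PO","run_id":"218"}]',
]

records = [record for line in lines for record in parse_record_line(line)]

# Difference between two consecutive uptime samples.
uptime_delta = records[1]["time"] - records[0]["time"]
print(uptime_delta)  # 5
```

The difference of 5 between consecutive `time` values matches the 5-second polling interval used in this demo.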

This launchfile is a wrapper of dc_bringup/launch/bringup.launch.py which loads a custom yaml configuration

Configuration

Measurement

measurement_server:
  ros__parameters:
    measurement_plugins: ["uptime"]
    uptime:
      plugin: "dc_measurements/Uptime"
      topic_output: "/dc/measurement/uptime"
      polling_interval: 5000
      enable_validator: true
      debug: true
      tags: ["flb_stdout"]
      init_collect: true
    custom_str_params_list: ["robot_name", "id"]
    custom_str_params:
      robot_name:
        name: robot_name
        value: C3PO
      id:
        name: id
        value_from_file: /etc/machine-id
    run_id:
      enabled: true
      counter: true
      counter_path: "$HOME/run_id"
      uuid: false

measurement_plugins (Mandatory): List of the plugins to enable. Each entry is a custom string that must match the name of a measurement plugin dictionary defined at the same level. A plugin that is not listed is not loaded.

uptime.plugin (Mandatory): Name of the plugin. If you are not sure which plugins are available, use the CLI tool to list them

uptime.tags (Mandatory): Used by Fluent Bit to match inputs and outputs. More here

uptime.polling_interval (Optional): Interval at which data is collected, in milliseconds

uptime.enable_validator (Optional): Validate the data against a JSON schema. The schema file is located in the dc_measurements package. You can provide your own using the json_schema_path parameter, which we will explore later on

uptime.debug (Optional): More verbose output

uptime.init_collect (Optional): Collect when the node starts instead of waiting for the polling_interval time to pass

run_id.enabled (Optional): Identify which run the robot is in. A new run ID is generated at every start of the node, using either a counter that increments at each restart of the node or a UUID

run_id.counter (Optional): Enable counter for the run_id

run_id.counter_path (Optional): Path of the file that stores the last run ID. Environment variables in the path are expanded

run_id.uuid (Optional): Generate a new run ID by using a random UUID

This collects the uptime every 5 seconds (including once when the node starts) and forwards it to the flb_stdout destination.
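The interaction between polling_interval and init_collect can be illustrated with a small sketch. It only computes idealized collection times and does not reproduce how the measurement server schedules its timers; `collection_times` is a hypothetical helper.

```python
def collection_times(polling_interval_ms: int, init_collect: bool, count: int) -> list:
    """Return the first `count` collection times, in seconds after node start.

    Idealized model: with init_collect the first sample is taken at start,
    otherwise the first sample waits for one full polling interval.
    """
    interval_s = polling_interval_ms / 1000.0
    first = 0.0 if init_collect else interval_s
    return [first + i * interval_s for i in range(count)]


print(collection_times(5000, True, 3))   # [0.0, 5.0, 10.0]
print(collection_times(5000, False, 3))  # [5.0, 10.0, 15.0]
```

With the demo values (5000 ms, init_collect enabled), the first record is produced right at startup and the following ones every 5 seconds.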

Inject custom data for each record

Here, we want to append some content in every record: the robot name and its ID. While the robot name comes from a fixed variable in the parameter file, the id comes from the machine-id file.

custom_str_params_list (Optional): Look for those keys in this configuration to add them as keys and values in each record.

custom_str_params.robot_name (Optional): This parameter is loaded since it is mentioned in custom_str_params_list

custom_str_params.robot_name.name (Optional): Key in the dictionary to add

custom_str_params.robot_name.value (Optional): Value associated to the key in the dictionary to add

custom_str_params.id.name (Optional): Key in the dictionary to add

custom_str_params.id.value_from_file (Optional): Value associated to the key in the dictionary to add taken from the content of a file
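The way these parameters turn into extra keys in each record can be sketched as follows. This is an illustration of the behavior described above, not DC's implementation: `resolve_custom_params` is a hypothetical helper, a temporary file stands in for /etc/machine-id, and stripping the trailing newline from the file content is an assumption.

```python
import os
import tempfile


def resolve_custom_params(names, params):
    """Build the key/value pairs to append to every record."""
    extra = {}
    for entry_name in names:
        entry = params[entry_name]
        if "value" in entry:
            # Fixed value taken directly from the parameter file.
            extra[entry["name"]] = entry["value"]
        elif "value_from_file" in entry:
            # Value read from a file; stripping whitespace is an assumption.
            with open(entry["value_from_file"], encoding="utf-8") as handle:
                extra[entry["name"]] = handle.read().strip()
    return extra


# Stand-in for /etc/machine-id so the sketch runs on any machine.
with tempfile.NamedTemporaryFile("w", suffix=".id", delete=False) as tmp:
    tmp.write("be781e5ffb1e7ee4f817fe7b63e92c32\n")

custom_str_params = {
    "robot_name": {"name": "robot_name", "value": "C3PO"},
    "id": {"name": "id", "value_from_file": tmp.name},
}

record = {"time": 92395}
record.update(resolve_custom_params(["robot_name", "id"], custom_str_params))
os.unlink(tmp.name)
print(record)
```

Only the entries named in custom_str_params_list are resolved, which mirrors the role of that parameter in the configuration.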

Note that this configuration alone does not display the JSON on stdout: the data also needs the destination_server configuration to be forwarded through Fluent Bit

Find the complete measurements documentation here

Destination

destination_server:
  ros__parameters:
    flb:
      flush: 1
      flb_grace: 1
      log_level: "info"
      storage_path: "/var/log/flb-storage/"
      storage_sync: "full"
      storage_checksum: "off"
      storage_backlog_mem_limit: "1M"
      scheduler_cap: 200
      scheduler_base: 5
      http_server: true
      http_listen: "0.0.0.0"
      http_port: 2020
      in_storage_type: "filesystem"
      in_storage_pause_on_chunks_overlimit: "off"
    destination_plugins: ["flb_stdout"]
    flb_stdout:
      plugin: "dc_destinations/FlbStdout"
      inputs: ["/dc/measurement/uptime"]
      time_format: "double"
      time_key: "date"
      debug: false

Destinations

Let's analyze it piece by piece. First, we select the plugin to enable and create its section, which contains the plugin name and the topics to subscribe to. We need the topic list because, in the background, the ROS 2 Fluent Bit plugin subscribes to each topic.

destination_plugins (Mandatory): List of the plugins to enable. Each entry is a custom string that must match the name of a destination plugin dictionary defined at the same level. A plugin that is not listed is not loaded.

flb_stdout.plugin (Mandatory): Plugin to load

flb_stdout.inputs (Mandatory): Topics to subscribe to in order to get the data

flb_stdout.time_format (Optional): Format in which the date is printed

flb_stdout.time_key (Optional): Dictionary key from which the date is taken

flb_stdout.debug (Optional): Verbose output

Fluent Bit

Then, we configure Fluent Bit. This step is optional, but it is easy to do with the ROS parameters provided by the node.

flb.flush (Optional): Interval to flush output (seconds)

flb.flb_grace (Optional): Wait time (seconds) on exit

flb.log_level (Optional): Diagnostic level (error/warning/info/debug/trace)

flb.storage_path (Optional): Set an optional location in the file system to store streams and chunks of data. If this parameter is not set, Input plugins can only use in-memory buffering.

flb.storage_sync (Optional): Configure the synchronization mode used to store the data into the file system. It can take the values normal or full.

flb.storage_checksum (Optional): Enable the data integrity check when writing and reading data from the filesystem. The storage layer uses the CRC32 algorithm.

flb.storage_backlog_mem_limit (Optional): If storage.path is set, Fluent Bit looks for data chunks that were not delivered and are still in the storage layer; these are called backlog data. This option configures a hint of the maximum amount of memory to use when processing these records.

flb.scheduler_cap (Optional): Set a maximum retry time in seconds. The property is supported from v1.8.7.

flb.scheduler_base (Optional): Set a base of exponential backoff. The property is supported from v1.8.7.

flb.http_server (Optional): If true enable statistics HTTP server

flb.http_listen (Optional): Address to listen (e.g. 0.0.0.0)

flb.http_port (Optional): Port to listen (e.g. 8888)

flb.in_storage_type (Optional): Specifies the buffering mechanism to use. It can be memory or filesystem.

flb.in_storage_pause_on_chunks_overlimit (Optional): Specifies if file storage is to be paused when reaching the chunk limit.
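To give an intuition for scheduler_base and scheduler_cap, the sketch below follows the exponential backoff with jitter described in the Fluent Bit scheduling documentation, as we understand it: for the Nth retry, the wait time is a random number between base and min(base * 2^N, cap). The function names are hypothetical and the exact behavior may differ between Fluent Bit versions.

```python
import random


def retry_wait_bounds(retry: int, base: int = 5, cap: int = 200) -> tuple:
    """Lower and upper bound (seconds) of the wait before the Nth retry."""
    lower = base
    upper = min(base * 2 ** retry, cap)
    return lower, upper


def retry_wait(retry: int, base: int = 5, cap: int = 200) -> float:
    """Pick a random wait time within the bounds (the jitter part)."""
    lower, upper = retry_wait_bounds(retry, base, cap)
    return random.uniform(lower, upper)


# Bounds for the first retries with the demo values (base=5, cap=200).
for attempt in range(1, 8):
    print(attempt, retry_wait_bounds(attempt))
```

With the demo values, the upper bound doubles at each retry (10, 20, 40, 80, 160) until it reaches the cap of 200 seconds.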

Inject run id at each record

Finally, we set the run ID. It is used later on when fetching the data of a given run. It can come from a counter, which is incremented at each start of the node, or from a randomly generated UUID. The counter mechanism reads and writes a file on the system (take care not to delete it); you can set its path as a parameter.
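The counter mechanism can be sketched as below. This is not DC's implementation: `next_run_id` is a hypothetical helper, and the starting value, the precedence of uuid over counter and the file format are all assumptions made for illustration.

```python
import os
import tempfile
import uuid


def next_run_id(counter: bool, counter_path: str, use_uuid: bool) -> str:
    """Return the run ID for this start of the node."""
    if use_uuid:
        # Random UUID, independent of any file on disk.
        return str(uuid.uuid4())
    if not counter:
        raise ValueError("enable either counter or uuid")
    # Expand environment variables such as $HOME in the configured path.
    path = os.path.expandvars(counter_path)
    try:
        with open(path, encoding="utf-8") as handle:
            last = int(handle.read().strip())
    except (FileNotFoundError, ValueError):
        last = 0  # assumption: a missing or unreadable file restarts at 0
    current = last + 1
    with open(path, "w", encoding="utf-8") as handle:
        handle.write(str(current))
    return str(current)


with tempfile.TemporaryDirectory() as workdir:
    # DC_DEMO_HOME is a hypothetical variable standing in for $HOME.
    os.environ["DC_DEMO_HOME"] = workdir
    first = next_run_id(True, "$DC_DEMO_HOME/run_id", False)
    second = next_run_id(True, "$DC_DEMO_HOME/run_id", False)
    os.environ.pop("DC_DEMO_HOME")

print(first, second)  # 1 2
```

Because the counter lives in a file, deleting that file resets the sequence, which is why the text above warns against removing it.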

Find the complete destinations documentation here

Console output

Now that the node has started, let us see what is displayed in the console:

[component_container_isolated-1] [INFO] [1677668906.621492168] [dc_container]: Load Library: /root/ws/install/dc_measurements/lib/libmeasurement_server_core.so

[component_container_isolated-1] [INFO] [1677668906.634130562] [dc_container]: Found class: rclcpp_components::NodeFactoryTemplate<measurement_server::MeasurementServer>

[component_container_isolated-1] [INFO] [1677668906.634246977] [dc_container]: Instantiate class: rclcpp_components::NodeFactoryTemplate<measurement_server::MeasurementServer>

[component_container_isolated-1] [INFO] [1677668906.655803692] [measurement_server]:

measurement_server lifecycle node launched.

Waiting on external lifecycle transitions to activate

See https://design.ros2.org/articles/node_lifecycle.html for more information.

[component_container_isolated-1] [INFO] [1677668906.670233932] [dc_container]: Load Library: /root/ws/install/dc_destinations/lib/libdestination_server_core.so

[component_container_isolated-1] [INFO] [1677668906.675826543] [dc_container]: Found class: rclcpp_components::NodeFactoryTemplate<destination_server::DestinationServer>

[component_container_isolated-1] [INFO] [1677668906.675864319] [dc_container]: Instantiate class: rclcpp_components::NodeFactoryTemplate<destination_server::DestinationServer>

[component_container_isolated-1] [INFO] [1677668906.680499515] [destination_server]:

[component_container_isolated-1] destination_server lifecycle node launched.

[component_container_isolated-1] Waiting on external lifecycle transitions to activate

[component_container_isolated-1] See https://design.ros2.org/articles/node_lifecycle.html for more information.

[INFO] [launch_ros.actions.load_composable_nodes]: Loaded node '/destination_server' in container 'dc_container'

[component_container_isolated-1] [INFO] [1677668906.684802172] [dc_container]: Load Library: /opt/ros/humble/lib/libnav2_lifecycle_manager_core.so

[component_container_isolated-1] [INFO] [1677668906.685487672] [dc_container]: Found class: rclcpp_components::NodeFactoryTemplate<nav2_lifecycle_manager::LifecycleManager>

[component_container_isolated-1] [INFO] [1677668906.685510016] [dc_container]: Instantiate class: rclcpp_components::NodeFactoryTemplate<nav2_lifecycle_manager::LifecycleManager>

[component_container_isolated-1] [INFO] [1677668906.691075881] [lifecycle_manager_navigation]: Creating

[INFO] [launch_ros.actions.load_composable_nodes]: Loaded node '/lifecycle_manager_navigation' in container 'dc_container'

[component_container_isolated-1] [INFO] [1677668906.692954854] [lifecycle_manager_navigation]: Creating and initializing lifecycle service clients

[component_container_isolated-1] [INFO] [1677668906.695025050] [lifecycle_manager_navigation]: Starting managed nodes bringup...

The measurement server and destination server start as lifecycle nodes, you can read more about it here

Afterward, the measurement_server starts:

[component_container_isolated-1] [INFO] [1677668906.695056624] [lifecycle_manager_navigation]: Configuring measurement_server

[component_container_isolated-1] [INFO] [1677668906.695181755] [measurement_server]: Configuring

[component_container_isolated-1] [INFO] [1677668906.698019687] [measurement_server]: Creating measurement plugin uptime: Type dc_measurements/Uptime, Group key: uptime, Polling interval: 5000, Debug: 1, Validator enabled: 1, Schema path: , Tags: [flb_stdout], Init collect: 1, Init Max measurement: 0, Include measurement name: 0, Include measurement plugin name: 0, Remote keys: , Remote prefixes: , Include measurement plugin name: 0, Max measurement on condition: 0, If all condition: , If any condition: , If none condition:

[component_container_isolated-1] [INFO] [1677668906.698932685] [measurement_server]: Configuring uptime

[component_container_isolated-1] [INFO] [1677668906.699937310] [measurement_server]: Done configuring uptime

[component_container_isolated-1] [INFO] [1677668906.700162355] [measurement_server]: Looking for schema at /root/ws/install/dc_measurements/share/dc_measurements/plugins/measurements/json/uptime.json

[component_container_isolated-1] [INFO] [1677668906.700192161] [measurement_server]: schema: {"$schema":"http://json-schema.org/draft-07/schema#","description":"Time the system has been up","properties":{"time":{"description":"Time the system has been up","minimum":0,"type":"integer"}},"title":"Uptime","type":"object"}

Plugins are loaded and configured one by one (here there is only one, uptime). The configuration of each plugin is displayed, and the validation schema is loaded and its path printed.
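To make the role of the schema concrete, here is a minimal sketch that checks a record against the uptime schema printed in the log. It is deliberately limited to the two keywords this schema uses on its property (`type: integer` and `minimum`) and is not a general JSON Schema validator, nor the validator DC uses; `validate_record` is a hypothetical helper.

```python
import json

# Schema copied from the log line above.
UPTIME_SCHEMA = json.loads(
    '{"$schema":"http://json-schema.org/draft-07/schema#",'
    '"description":"Time the system has been up",'
    '"properties":{"time":{"description":"Time the system has been up",'
    '"minimum":0,"type":"integer"}},"title":"Uptime","type":"object"}'
)


def is_integer(value) -> bool:
    """JSON Schema integers exclude booleans but accept floats like 3.0."""
    if isinstance(value, bool):
        return False
    return isinstance(value, int) or (isinstance(value, float) and value.is_integer())


def validate_record(record, schema) -> list:
    """Return a list of error messages; an empty list means the record is valid."""
    if schema.get("type") == "object" and not isinstance(record, dict):
        return ["record is not an object"]
    errors = []
    for key, rules in schema.get("properties", {}).items():
        if key not in record:
            continue  # this schema declares no `required` list
        value = record[key]
        if rules.get("type") == "integer" and not is_integer(value):
            errors.append(f"{key}: expected an integer")
            continue
        if "minimum" in rules and value < rules["minimum"]:
            errors.append(f"{key}: below minimum {rules['minimum']}")
    return errors


print(validate_record({"time": 92395}, UPTIME_SCHEMA))  # []
print(validate_record({"time": -1}, UPTIME_SCHEMA))     # ['time: below minimum 0']
```

A record that fails such a check would be rejected before being sent, which is the purpose of enable_validator.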

Then, the destination_server starts:

[component_container_isolated-1] [INFO] [1677668906.700528260] [lifecycle_manager_navigation]: Configuring destination_server

[component_container_isolated-1] [INFO] [1677668906.700620104] [destination_server]: Configuring

[component_container_isolated-1] [INFO] [1677668906.701246047] [destination_server]: Fluent Bit service initialized

[component_container_isolated-1] [INFO] [1677668906.701470896] [destination_server]: Creating destination plugin flb_stdout: Type dc_destinations/FlbStdout, Debug: 0, Time format: double. Time key: date

[component_container_isolated-1] [INFO] [1677668906.702670279] [destination_server]: Configuring Flb plugin flb_stdout

[component_container_isolated-1] [INFO] [1677668906.702871127] [destination_server]: Loaded lua filter. Match=ros2, code=function concatenate(tag, timestamp, record) if (type(record["tags"]) == "table") then record["tags"] = table.concat(record["tags"], ",") end return 2, timestamp, record end

[component_container_isolated-1] [INFO] [1677668906.702912686] [destination_server]: Loaded rewrite_tag filter. Match=ros2, Rule=$tags .*(flb_stdout).* flb_stdout true

[component_container_isolated-1] [INFO] [1677668906.703021628] [destination_server]: Done configuring Flb plugin flb_stdout

[component_container_isolated-1] [INFO] [1677668906.703049312] [destination_server]: Loading input ros2 shared library /root/ws/install/fluent_bit_plugins/lib/flb-in_ros2.so...

[component_container_isolated-1] [INFO] [1677668906.703818806] [destination_server]: Loaded input ros2 shared library /root/ws/install/fluent_bit_plugins/lib/flb-in_ros2.so

[component_container_isolated-1] [INFO] [1677668906.703899346] [destination_server]: Flb ros2 plugin initialized. ret=0

[component_container_isolated-1] [INFO] [1677668906.703908977] [destination_server]: Starting Flb engine...

[component_container_isolated-1] [2023/03/01 11:08:26] [ info] [fluent bit] version=2.0.7, commit=1ab360f79c, pid=31126

[component_container_isolated-1] [2023/03/01 11:08:26] [ info] [storage] ver=1.3.0, type=memory+filesystem, sync=full, checksum=off, max_chunks_up=128

[component_container_isolated-1] [2023/03/01 11:08:26] [ info] [storage] backlog input plugin: storage_backlog.1

[component_container_isolated-1] [2023/03/01 11:08:26] [ info] [cmetrics] version=0.5.7

[component_container_isolated-1] [2023/03/01 11:08:26] [ info] [ctraces ] version=0.2.5

[component_container_isolated-1] [2023/03/01 11:08:26] [ info] [input:ros2:ros2.0] initializing

[component_container_isolated-1] [2023/03/01 11:08:26] [ info] [input:ros2:ros2.0] storage_strategy='filesystem' (memory + filesystem)

[component_container_isolated-1] [2023/03/01 11:08:26] [ info] Started node fluentbit_rclc

[component_container_isolated-1] [2023/03/01 11:08:26] [ info] Created subscriber /dc/measurement/uptime

[component_container_isolated-1] [2023/03/01 11:08:26] [ info] [input:storage_backlog:storage_backlog.1] initializing

[component_container_isolated-1] [2023/03/01 11:08:26] [ info] [input:storage_backlog:storage_backlog.1] storage_strategy='memory' (memory only)

[component_container_isolated-1] [2023/03/01 11:08:26] [ info] [input:storage_backlog:storage_backlog.1] queue memory limit: 976.6K

[component_container_isolated-1] [2023/03/01 11:08:26] [ info] [input:emitter:emitter_for_rewrite_tag.2] initializing

[component_container_isolated-1] [2023/03/01 11:08:26] [ info] [input:emitter:emitter_for_rewrite_tag.2] storage_strategy='filesystem' (memory + filesystem)

[component_container_isolated-1] [2023/03/01 11:08:26] [ info] [output:stdout:stdout.0] worker #0 started

[component_container_isolated-1] [2023/03/01 11:08:26] [ info] [http_server] listen iface=0.0.0.0 tcp_port=2020

[component_container_isolated-1] [2023/03/01 11:08:26] [ info] [sp] stream processor started

[component_container_isolated-1] [INFO] [1677668906.744577163] [destination_server]: Started Flb engine

It starts the FlbStdout destination plugin and loads the filters used internally by Fluent Bit to edit timestamps and tags. It then loads the ROS 2 Fluent Bit shared library and initializes it with the topics we provided as parameters.

Afterward, Fluent Bit starts. It prints its version, storage strategy and buffer configuration, and lists which plugins are loaded.

Then, the measurement and destination server nodes are activated:

[component_container_isolated-1] [INFO] [1677668906.745180268] [lifecycle_manager_navigation]: Activating measurement_server

[component_container_isolated-1] [INFO] [1677668906.745462265] [measurement_server]: Activating

[component_container_isolated-1] [INFO] [1677668906.745558665] [measurement_server]: Activating uptime

[component_container_isolated-1] [INFO] [1677668906.746014639] [measurement_server]: Creating bond (measurement_server) to lifecycle manager.

[component_container_isolated-1] [INFO] [1677668906.853199899] [lifecycle_manager_navigation]: Server measurement_server connected with bond.

[component_container_isolated-1] [INFO] [1677668906.853316226] [lifecycle_manager_navigation]: Activating destination_server

[component_container_isolated-1] [INFO] [1677668906.853620571] [destination_server]: Activating

[component_container_isolated-1] [INFO] [1677668906.853722659] [destination_server]: Activating flb_stdout

[component_container_isolated-1] [INFO] [1677668906.853796078] [destination_server]: Creating bond (destination_server) to lifecycle manager.

[component_container_isolated-1] [INFO] [1677668906.961666207] [lifecycle_manager_navigation]: Server destination_server connected with bond.

[component_container_isolated-1] [INFO] [1677668906.961777773] [lifecycle_manager_navigation]: Managed nodes are active

[component_container_isolated-1] [INFO] [1677668906.961820078] [lifecycle_manager_navigation]: Creating bond timer...

Finally, we see the data:

[component_container_isolated-1] [{"date":1677668906.745817,"time":92395,"id":"be781e5ffb1e7ee4f817fe7b63e92c32","robot_name":"C3PO","run_id":"218"}]

[component_container_isolated-1] [{"date":1677668911.700309,"time":92400,"id":"be781e5ffb1e7ee4f817fe7b63e92c32","robot_name":"C3PO","run_id":"218"}]

[component_container_isolated-1] [{"date":1677668916.70031,"time":92405,"id":"be781e5ffb1e7ee4f817fe7b63e92c32","robot_name":"C3PO","run_id":"218"}]

[component_container_isolated-1] [{"date":1677668921.700388,"time":92410,"id":"be781e5ffb1e7ee4f817fe7b63e92c32","robot_name":"C3PO","run_id":"218"}]

[component_container_isolated-1] [{"date":1677668926.700422,"time":92415,"id":"be781e5ffb1e7ee4f817fe7b63e92c32","robot_name":"C3PO","run_id":"218"}]

So...what happened?

- The measurement plugin starts publishing data to /dc/measurement/uptime, which contains the JSON, tags and timestamp of the message

- The run ID and robot_name are appended to the JSON

- The ROS 2 Fluent Bit plugin, which subscribes to this topic, receives the data and passes it on to Fluent Bit

- Fluent Bit receives it and applies the timestamp filter (which modifies the timestamp to the desired format)

- Fluent Bit changes the tag with another filter. This tag is used to match the record to the destination afterward.

- Data is flushed

- The Fluent Bit stdout output plugin receives the data because tags match (the flb_stdout tag is used) and forwards it to Stdout
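The tag handling in these steps can be mimicked in a few lines. The sketch below reproduces, in Python, the Lua `concatenate` filter and the rewrite_tag rule printed in the destination_server log (`$tags .*(flb_stdout).* flb_stdout true`). It only illustrates the matching logic and is not how Fluent Bit is implemented; the `flb_pgsql` rule is a hypothetical second destination added to show a non-match.

```python
import re


def concatenate_tags(record: dict) -> dict:
    """Mirror of the Lua filter: join a list of tags into one comma-separated string."""
    if isinstance(record.get("tags"), list):
        record = {**record, "tags": ",".join(record["tags"])}
    return record


def rewrite_tag(record: dict, rules: list) -> list:
    """Return the new tag of every rule whose regex matches the `tags` field."""
    tags = record.get("tags", "")
    return [new_tag for pattern, new_tag in rules if re.search(pattern, tags)]


RULES = [
    (r".*(flb_stdout).*", "flb_stdout"),  # rule shown in the log
    (r".*(flb_pgsql).*", "flb_pgsql"),    # hypothetical second destination
]

record = concatenate_tags({"time": 92395, "tags": ["flb_stdout"]})
print(record["tags"])              # flb_stdout
print(rewrite_tag(record, RULES))  # ['flb_stdout']
```

A measurement tagged with several destinations would match several rules, so the same record could be routed to more than one output.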

Group memory and uptime

This demo introduces the group node. It subscribes to multiple topics, groups their data and republishes the result on a new topic.

Let's run it:

ros2 launch dc_demos group_memory_uptime_stdout.launch.py

[component_container_isolated-1] [{"memory":{"used":71.67569732666016},"date":1677673405.921659,"uptime":{"time":96894},"run_id":"221"}]

[component_container_isolated-1] [{"memory":{"used":71.70790100097656},"date":1677673408.868847,"uptime":{"time":96897},"run_id":"221"}]

[component_container_isolated-1] [{"memory":{"used":71.72582244873047},"date":1677673411.869299,"uptime":{"time":96900},"run_id":"221"}]

[component_container_isolated-1] [{"memory":{"used":71.86643218994141},"date":1677673414.869292,"uptime":{"time":96903},"run_id":"221"}]

[component_container_isolated-1] [{"memory":{"used":71.82553863525391},"date":1677673417.869207,"uptime":{"time":96906},"run_id":"221"}]

This launchfile is a wrapper of dc_bringup/launch/bringup.launch.py which loads a custom YAML configuration.

Note that the group node is started here. A single launchfile parameter enables it. In the uptime demo, it is disabled by default because it is not used.

Configuration

Measurement

We collect data from 2 plugins: memory and uptime. The first is polled every second and the latter every 3 seconds.

measurement_server:
  ros__parameters:
    measurement_plugins: ["memory", "uptime"]
    memory:
      plugin: "dc_measurements/Memory"
      group_key: "memory"
      topic_output: "/dc/measurement/memory"
      polling_interval: 1000
    uptime:
      plugin: "dc_measurements/Uptime"
      group_key: "uptime"
      topic_output: "/dc/measurement/uptime"
      polling_interval: 3000

Data is now published on 2 ROS topics: /dc/measurement/uptime and /dc/measurement/memory.

The group_key set here is used by the group node as the key for this measurement in the new dictionary.
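To make the two polling intervals concrete, the sketch below lists which measurements would publish at each tick during the first six seconds. It is only an illustration: it assumes each plugin first publishes one full interval after start, and `poll_times` is a hypothetical helper, not part of DC.

```python
def poll_times(interval_ms, horizon_ms):
    """Times (in ms) at which a plugin with this polling interval publishes."""
    return list(range(interval_ms, horizon_ms + 1, interval_ms))


# Values copied from the measurement_server configuration above.
plugins = {
    "memory": {"group_key": "memory", "polling_interval": 1000},
    "uptime": {"group_key": "uptime", "polling_interval": 3000},
}

schedule = {}  # time in ms -> group keys published at that time
for cfg in plugins.values():
    for t in poll_times(cfg["polling_interval"], 6000):
        schedule.setdefault(t, []).append(cfg["group_key"])

for t in sorted(schedule):
    print(t, schedule[t])
```

Both measurements are available together only every 3000 ms, which is why the slower plugin sets the pace of a group that needs both.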

Group

This creates a memory_uptime group, which subscribes to the /dc/measurement/memory and /dc/measurement/uptime topics and republishes the result on /dc/group/memory_uptime. The sync_delay parameter defines a 5-second window in which the group waits for data from each topic; if one topic does not publish within that window, the data is thrown away.

group_server:
  ros__parameters:
    groups: ["memory_uptime"]
    memory_uptime:
      inputs: ["/dc/measurement/memory", "/dc/measurement/uptime"]
      output: "/dc/group/memory_uptime"
      sync_delay: 5.0
      group_key: "memory_uptime"
      tags: ["flb_stdout"]
      include_group_name: false

include_group_name (Optional): Includes the name of the group in the JSON, which makes it easier to fetch the data from your API later on. In this case, we can skip it since we only have one group to show.

Notice that the group also has a "group_key". This means a group can be part of another group.
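As a rough illustration of what the group produces, the sketch below nests each measurement under its group_key and optionally adds the group name. The function `merge_group` is hypothetical, and storing the group name under a `name` field is an assumption based on the "name":"robot" entry visible in the Turtlebot3 demo records.

```python
def merge_group(latest, include_group_name=False, name=None):
    """Build the group dictionary from the latest payload of each input.

    latest: mapping of group_key -> JSON payload of that measurement.
    """
    merged = dict(latest)
    if include_group_name and name is not None:
        merged["name"] = name  # assumed field name, see lead-in
    return merged


latest = {
    "memory": {"used": 71.67569732666016},
    "uptime": {"time": 96894},
}

group = merge_group(latest)
named = merge_group(latest, include_group_name=True, name="memory_uptime")

# A group has its own group_key, so it can be nested inside another group.
outer = merge_group({"memory_uptime": group})

print(group)
print(named)
print(outer)
```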

Destination

Here, we only subscribe to the /dc/group/memory_uptime topic:

flb_stdout:
  plugin: "dc_destinations/FlbStdout"
  inputs: ["/dc/group/memory_uptime"]

Console output

In the terminal, you can see the result, published every 3 seconds (see the date field). This period matches the largest polling_interval of the grouped measurements, and it fits within the window defined by sync_delay.

Finally, note that the new dictionary uses the keys defined by group_key in the measurement_server plugin configuration. They are transferred through the ROS message.

[component_container_isolated-1] [{"memory":{"used":71.67569732666016},"date":1677673405.921659,"uptime":{"time":96894},"run_id":"221"}]

[component_container_isolated-1] [{"memory":{"used":71.70790100097656},"date":1677673408.868847,"uptime":{"time":96897},"run_id":"221"}]

[component_container_isolated-1] [{"memory":{"used":71.72582244873047},"date":1677673411.869299,"uptime":{"time":96900},"run_id":"221"}]

[component_container_isolated-1] [{"memory":{"used":71.86643218994141},"date":1677673414.869292,"uptime":{"time":96903},"run_id":"221"}]

[component_container_isolated-1] [{"memory":{"used":71.82553863525391},"date":1677673417.869207,"uptime":{"time":96906},"run_id":"221"}]
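The 3-second period can be checked directly from the date fields of the records above. A small sketch that computes the gaps between consecutive records:

```python
# "date" values copied from the five console records above.
dates = [
    1677673405.921659,
    1677673408.868847,
    1677673411.869299,
    1677673414.869292,
    1677673417.869207,
]

# Difference between each record and the previous one, in seconds.
intervals = [round(later - earlier, 3) for earlier, later in zip(dates, dates[1:])]
print(intervals)
```

Every gap is close to 3 seconds, the polling_interval of the uptime measurement.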

Turtlebot3

In this example, we add a robot and start collecting robot data to Stdout.

You will also need 2 terminal windows, to:

- Run the Nav2 turtlebot3 launchfile: it starts localization, navigation and RViz

- Run DC

Since RViz is pretty verbose, using 2 terminal windows makes it easier to read the JSON printed in the terminal.

Setup the environment

In each terminal, source your environment and set up the Turtlebot configuration:

source /opt/ros/humble/setup.bash

source install/setup.bash

export GAZEBO_MODEL_PATH=$GAZEBO_MODEL_PATH:/opt/ros/humble/share/turtlebot3_gazebo/models

export TURTLEBOT3_MODEL=waffle

Start Navigation

Then, start the Turtlebot launchfile:

ros2 launch nav2_bringup tb3_simulation_launch.py headless:=False

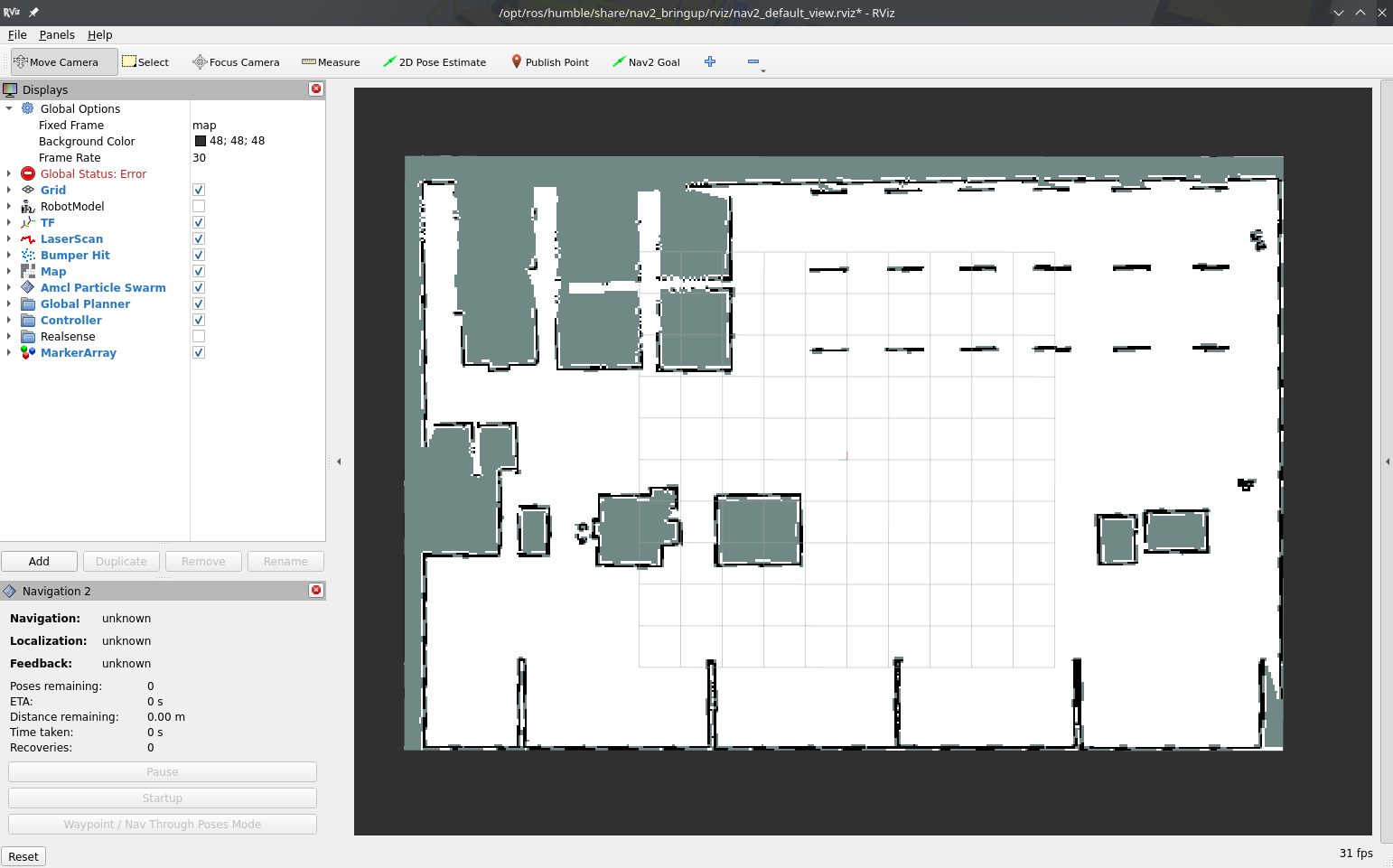

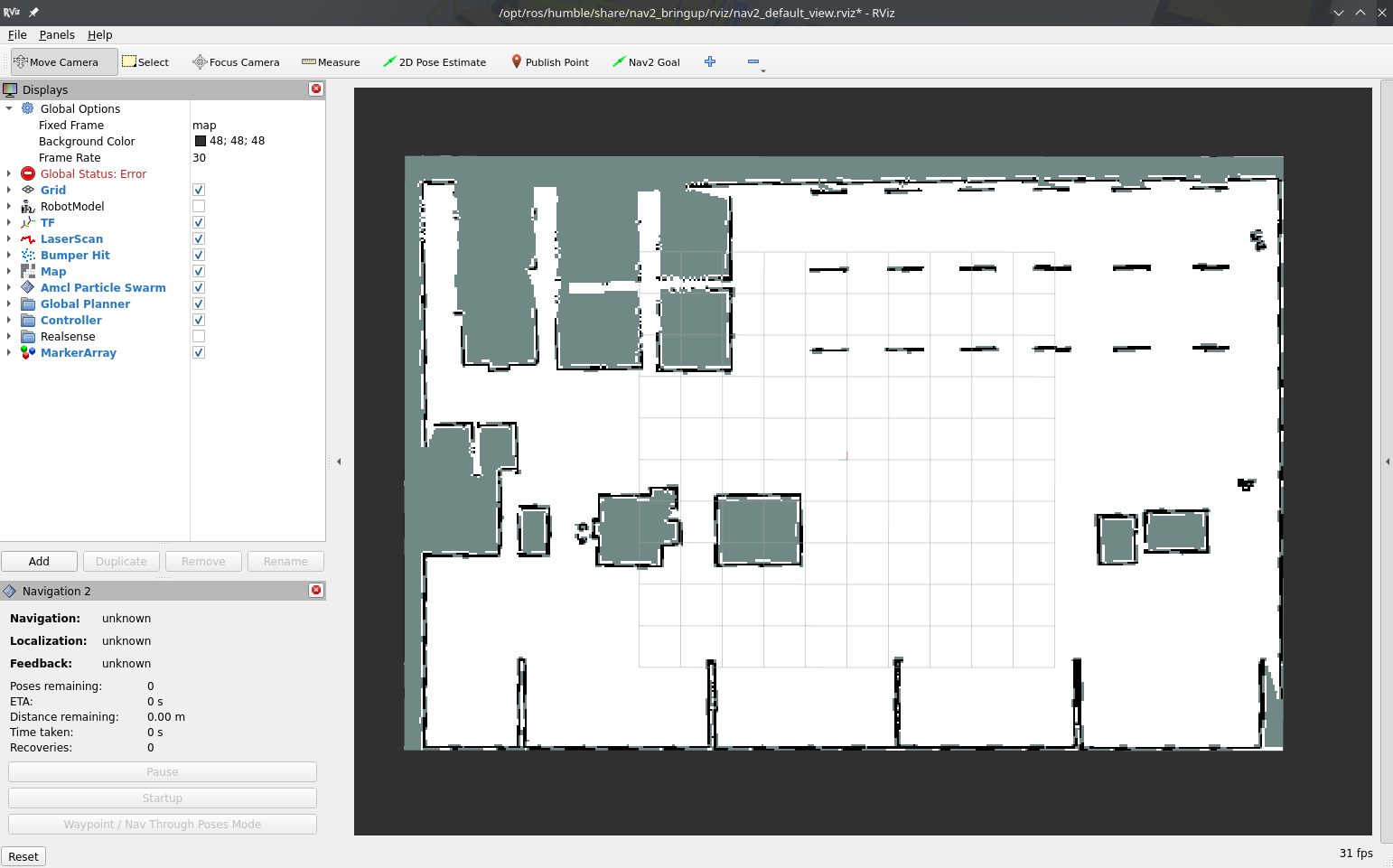

RViz and Gazebo will start: you should now see the robot in Gazebo, and the map on RViz.

Set the robot position using the "2D Pose Estimate" button.

If any problem occurs, please take a look at the official Nav2 documentation, which covers this case.

Start DC

Execute

ros2 launch dc_demos tb3_simulation_stdout.launch.py

At the end, the data is displayed:

[component_container_isolated-1] [{"date":1677690777.96911,"width":384,"height":384,"remote_paths":{"minio":{"pgm":"C3PO/2023/03/01/17/map/2023-03-01T17:12:57.pgm","yaml":"C3PO/2023/03/01/17/map/2023-03-01T17:12:57.yaml"}},"resolution":0.05000000074505806,"origin":{"x":-10,"y":-10},"local_paths":{"pgm":"/root/dc_data/C3PO/2023/03/01/17/map/2023-03-01T17:12:57.pgm","yaml":"/root/dc_data/C3PO/2023/03/01/17/map/2023-03-01T17:12:57.yaml"},"id":"be781e5ffb1e7ee4f817fe7b63e92c32","robot_name":"C3PO","run_id":"234"}]

[component_container_isolated-1] [{"date":1677690782.883036,"width":384,"height":384,"remote_paths":{"minio":{"pgm":"C3PO/2023/03/01/17/map/2023-03-01T17:13:02.pgm","yaml":"C3PO/2023/03/01/17/map/2023-03-01T17:13:02.yaml"}},"resolution":0.05000000074505806,"origin":{"x":-10,"y":-10},"local_paths":{"pgm":"/root/dc_data/C3PO/2023/03/01/17/map/2023-03-01T17:13:02.pgm","yaml":"/root/dc_data/C3PO/2023/03/01/17/map/2023-03-01T17:13:02.yaml"},"id":"be781e5ffb1e7ee4f817fe7b63e92c32","robot_name":"C3PO","run_id":"234"}]

[component_container_isolated-1] [{"position":{"x":-0.7254206029057992,"yaw":0.134098723062189,"tags":["flb_stdout"],"y":-0.5116378019627142},"cmd_vel":{"tags":["flb_stdout"],"linear":{"x":0.26,"z":0,"y":0},"angular":{"x":0,"z":-0.157895,"y":0}},"speed":{"tags":["flb_stdout"],"linear":{"x":8.720555295508514e-05,"z":0,"y":4.219923511090644e-06},"computed":8.730759543499989e-05,"angular":{"x":-0.0002958005596118955}},"date":1677690787.878526,"id":"be781e5ffb1e7ee4f817fe7b63e92c32","robot_name":"C3PO","run_id":"234"}]

[component_container_isolated-1] [{"date":1677690787.883072,"width":384,"height":384,"remote_paths":{"minio":{"pgm":"C3PO/2023/03/01/17/map/2023-03-01T17:13:07.pgm","yaml":"C3PO/2023/03/01/17/map/2023-03-01T17:13:07.yaml"}},"resolution":0.05000000074505806,"origin":{"x":-10,"y":-10},"local_paths":{"pgm":"/root/dc_data/C3PO/2023/03/01/17/map/2023-03-01T17:13:07.pgm","yaml":"/root/dc_data/C3PO/2023/03/01/17/map/2023-03-01T17:13:07.yaml"},"id":"be781e5ffb1e7ee4f817fe7b63e92c32","robot_name":"C3PO","run_id":"234"}]

[component_container_isolated-1] [{"position":{"x":-0.1931822640325796,"yaw":-0.08553498481283986,"tags":["flb_stdout"],"y":-0.5267276846092974},"cmd_vel":{"tags":["flb_stdout"],"linear":{"x":0.232632,"z":0,"y":0},"angular":{"x":0,"z":-0.0526316,"y":0}},"speed":{"tags":["flb_stdout"],"linear":{"x":0.2466804589428482,"z":0,"y":4.147463233418404e-05},"computed":0.2466804624294339,"angular":{"x":-0.1575310923819759}},"date":1677690789.878385,"id":"be781e5ffb1e7ee4f817fe7b63e92c32","robot_name":"C3PO","run_id":"234"}]

[component_container_isolated-1] [{"position":{"x":-0.06732902212346159,"yaw":-0.06199357549188581,"tags":["flb_stdout"],"y":-0.5350783704643411},"cmd_vel":{"tags":["flb_stdout"],"linear":{"x":0.191579,"z":0,"y":0},"angular":{"x":0,"z":-0.0526316,"y":0}},"speed":{"tags":["flb_stdout"],"linear":{"x":0.2464715530337069,"z":0,"y":1.735682541426087e-05},"computed":0.2464715536448513,"angular":{"x":-0.05272644093984532}},"date":1677690790.878357,"id":"be781e5ffb1e7ee4f817fe7b63e92c32","robot_name":"C3PO","run_id":"234"}]

[component_container_isolated-1] [{"position":{"x":0.1426837169393564,"yaw":-0.1292500346335131,"tags":["flb_stdout"],"y":-0.5883037844577782},"cmd_vel":{"tags":["flb_stdout"],"linear":{"x":0,"z":0,"y":0},"angular":{"x":0,"z":0,"y":0}},"speed":{"tags":["flb_stdout"],"linear":{"x":0.1917414358977791,"z":0,"y":1.44170547699829e-05},"computed":0.1917414364397889,"angular":{"x":-0.05280628215810287}},"date":1677690791.878987,"id":"be781e5ffb1e7ee4f817fe7b63e92c32","robot_name":"C3PO","run_id":"234"}]

[component_container_isolated-1] [{"position":{"x":0.2658444927274801,"yaw":-0.1669572018597313,"tags":["flb_stdout"],"y":-0.6121168728850158},"cmd_vel":{"tags":["flb_stdout"],"linear":{"x":0,"z":0,"y":0},"angular":{"x":0,"z":0,"y":0}},"speed":{"tags":["flb_stdout"],"linear":{"x":3.645119603853573e-05,"z":0,"y":3.14134397110158e-06},"computed":3.658630528742332e-05,"angular":{"x":-0.0004012554003809027}},"date":1677690792.879082,"id":"be781e5ffb1e7ee4f817fe7b63e92c32","robot_name":"C3PO","run_id":"234"}]

[component_container_isolated-1] [{"date":1677690792.883126,"width":384,"height":384,"remote_paths":{"minio":{"pgm":"C3PO/2023/03/01/17/map/2023-03-01T17:13:12.pgm","yaml":"C3PO/2023/03/01/17/map/2023-03-01T17:13:12.yaml"}},"resolution":0.05000000074505806,"origin":{"x":-10,"y":-10},"local_paths":{"pgm":"/root/dc_data/C3PO/2023/03/01/17/map/2023-03-01T17:13:12.pgm","yaml":"/root/dc_data/C3PO/2023/03/01/17/map/2023-03-01T17:13:12.yaml"},"id":"be781e5ffb1e7ee4f817fe7b63e92c32","robot_name":"C3PO","run_id":"234"}]

Since the JSON is quite large, let's analyze 2 different records.

The first one is the data published by the robot group:

[
  {
    "cmd_vel": {
      "linear": {
        "x": 0.246316,
        "y": 0,
        "z": 0
      },
      "angular": {
        "x": 0,
        "y": 0,
        "z": 0.263158
      }
    },
    "date": 1677694799.685468,
    "position": {
      "x": -0.6033772358727438,
      "yaw": -0.7146495585355921,
      "y": -1.633862970534114
    },
    "speed": {
      "angular": {
        "x": -0.0002542699063698451
      },
      "computed": 3.279051750818494e-05,
      "linear": {
        "x": 3.244397182637543e-05,
        "y": 4.754653571390878e-06,
        "z": 0
      }
    },
    "name": "robot",
    "id": "be781e5ffb1e7ee4f817fe7b63e92c32",
    "robot_name": "C3PO",
    "run_id": "240"
  }
]

This record contains the speed, cmd_vel and position from the group "robot". Note that the tags of each measurement have been dropped, because they are not used inside this group (they are only used when a measurement is sent on its own).

The second one is the data published by the map measurement:

[
  {
    "width": 384,
    "remote_paths": {
      "minio": {
        "yaml": "C3PO/2023/03/01/18/map/2023-03-01T18:20:16.yaml",
        "pgm": "C3PO/2023/03/01/18/map/2023-03-01T18:20:16.pgm"
      }
    },
    "name": "map",
    "resolution": 0.05000000074505806,
    "origin": {
      "x": -10,
      "y": -10
    },
    "local_paths": {
      "yaml": "/root/dc_data/C3PO/2023/03/01/18/map/2023-03-01T18:20:16.yaml",
      "pgm": "/root/dc_data/C3PO/2023/03/01/18/map/2023-03-01T18:20:16.pgm"
    },
    "date": 1677694816.690489,
    "height": 384,
    "id": "be781e5ffb1e7ee4f817fe7b63e92c32",
    "robot_name": "C3PO",
    "run_id": "240"
  }
]

This record contains the map data from the measurement.
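Because both kinds of records arrive on the same output, a consumer can tell them apart with the `name` field. The sketch below is one possible way to do it on the receiving side; the sample lines are shortened versions of the two records above, and `classify` is a hypothetical helper.

```python
import json


def classify(record):
    """Return the record's "name" field, or "unknown" when it is absent."""
    return record.get("name", "unknown")


# Shortened copies of the two records analyzed above.
lines = [
    '[{"name": "robot", "position": {"x": -0.60, "yaw": -0.71, "y": -1.63}, "run_id": "240"}]',
    '[{"name": "map", "width": 384, "height": 384, "run_id": "240"}]',
]

by_name = {}
for line in lines:
    # Each line holds a JSON array with one or more records.
    for record in json.loads(line):
        by_name.setdefault(classify(record), []).append(record)

print(sorted(by_name))
```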

Configuration

Measurement

measurement_server:
  ros__parameters:
    custom_str_params: ["robot_name"]
    robot_name: "C3PO"
    measurement_plugins: ["cmd_vel", "map", "position", "speed"]
    run_id:
      enabled: true
      counter: true
      counter_path: "$HOME/run_id"
      uuid: false
    save_local_base_path: "$HOME/dc_data/"
    all_base_path: "=robot_name/%Y/%m/%d/%H"
    cmd_vel:
      plugin: "dc_measurements/CmdVel"
      tags: ["flb_stdout"]
      group_key: "cmd_vel"
      enable_validator: true
      topic_output: "/dc/measurement/cmd_vel"
    position:
      plugin: "dc_measurements/Position"
      tags: ["flb_stdout"]
      group_key: "position"
      topic_output: "/dc/measurement/position"
      polling_interval: 1000
      enable_validator: true
      init_collect: true
      global_frame: "map"
      robot_base_frame: "base_link"
      transform_timeout: 0.1
    speed:
      plugin: "dc_measurements/Speed"
      tags: ["flb_stdout"]
      group_key: "speed"
      odom_topic: "/odom"
      topic_output: "/dc/measurement/speed"
    map:
      plugin: "dc_measurements/Map"
      tags: ["flb_stdout"]
      group_key: "map"
      polling_interval: 5000
      save_path: "map/%Y-%m-%dT%H:%M:%S"
      topic_output: "/dc/measurement/map"
      save_map_timeout: 4.0
      remote_prefixes: [""]
      remote_keys: ["minio"]

save_local_base_path (Optional): Used as a common base for all saved files from measurement plugins. all_base_path is concatenated to it afterwards for defining the path where files are saved.

all_base_path (Optional): Used as a common base for some measurements to save files. Is concatenated to save_local_base_path. Note the =robot_name, which is later replaced by C3PO (the variable defined in custom_str_params)

map.remote_keys: Creates one dictionary inside remote_paths for each string in this field (here, minio). Each dictionary contains the fields used by your API to fetch the remote URLs of the files.
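The way these path parameters combine can be sketched as follows. This is an approximation written for illustration: `expand` and `map_paths` are hypothetical helpers, the `=name` substitution is modeled as a plain string replace, and `$HOME` is assumed to be already expanded to `/root` as in the logs.

```python
import posixpath
from datetime import datetime


def expand(template, params, when):
    """Replace =name tokens with custom parameters, then apply strftime codes."""
    for name, value in params.items():
        template = template.replace("=" + name, value)
    return when.strftime(template)


def map_paths(base, all_base, save_path, params, when):
    """Return the local and remote path stems (without file extension)."""
    relative = expand(posixpath.join(all_base, save_path), params, when)
    return {"local": posixpath.join(base, relative), "remote": relative}


paths = map_paths(
    base="/root/dc_data/",                 # save_local_base_path, expanded
    all_base="=robot_name/%Y/%m/%d/%H",    # all_base_path
    save_path="map/%Y-%m-%dT%H:%M:%S",     # map.save_path
    params={"robot_name": "C3PO"},         # custom_str_params
    when=datetime(2023, 3, 1, 18, 20, 16),
)
print(paths["local"] + ".pgm")
print(paths["remote"] + ".yaml")
```

With the timestamp used here, the stems line up with the local_paths and remote_paths of the map record shown earlier.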

Group

group_server:
  ros__parameters:
    groups: ["robot"]
    robot:
      inputs:
        [
          "/dc/measurement/cmd_vel",
          "/dc/measurement/position",
          "/dc/measurement/speed",
        ]
      output: "/dc/group/robot"
      sync_delay: 5.0
      group_key: "robot"
      tags: ["flb_stdout"]
      include_group_name: true

This creates a group with data from cmd_vel, position and speed. Even though nothing looks new here, one point is important. In the previous demo, we mentioned that if the group does not receive a message from every input, it drops the data. This matters here because cmd_vel is not published all the time in this example (it is not published when the robot is not moving). As a result, the data is collected only when the robot moves (when a controller sends a command).

If you want to collect the position and the speed constantly, you can take cmd_vel out of this group and add its topic to the destination inputs.
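The effect of a missing input can be illustrated with a small simulation. It is not the synchronization algorithm used by the group node, only a simplified stand-in: a group emits when it holds a message from every input that is no older than sync_delay, then starts over. The arrival times and the function `group_outputs` are made up for the example.

```python
def group_outputs(arrivals, inputs, sync_delay):
    """Return the times at which a group needing every input would publish.

    arrivals: list of (time_s, topic) tuples sorted by time.
    """
    emitted = []
    pending = {}  # topic -> arrival time of its newest unconsumed message
    for time_s, topic in arrivals:
        if topic not in inputs:
            continue
        pending[topic] = time_s
        # Forget messages that fell out of the sync window.
        pending = {t: ts for t, ts in pending.items() if time_s - ts <= sync_delay}
        if set(pending) == set(inputs):
            emitted.append(time_s)
            pending = {}
    return emitted


# position and speed arrive every second; cmd_vel only while the robot moves.
arrivals = sorted(
    [(t, "position") for t in range(1, 7)]
    + [(t, "speed") for t in range(1, 7)]
    + [(4, "cmd_vel"), (5, "cmd_vel")]
)

with_cmd_vel = group_outputs(arrivals, ["cmd_vel", "position", "speed"], 5.0)
without_cmd_vel = group_outputs(arrivals, ["position", "speed"], 5.0)
print(with_cmd_vel)
print(without_cmd_vel)
```

In this model the three-input group publishes only around the moments cmd_vel is received, while the two-input group publishes every second.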

Destinations

destination_server:
  ros__parameters:
    flb:
      flush: 1
      flb_grace: 1
      log_level: "info"
      storage_path: "/var/log/flb-storage/"
      storage_sync: "full"
      storage_checksum: "off"
      storage_backlog_mem_limit: "1M"
      scheduler_cap: 200
      scheduler_base: 5
      http_server: true
      http_listen: "0.0.0.0"
      http_port: 2020
      in_storage_type: "filesystem"
      in_storage_pause_on_chunks_overlimit: "off"
    destination_plugins: ["flb_stdout"]
    custom_str_params_list: ["robot_name", "id"]
    custom_str_params:
      robot_name:
        name: robot_name
        value: "C3PO"
      # Requires systemd package
      id:
        name: id
        value_from_file: /etc/machine-id
    flb_stdout:
      plugin: "dc_destinations/FlbStdout"
      inputs: ["/dc/group/robot", "/dc/measurement/map"]
      time_format: "double"
      time_key: "date"
      debug: false

Nothing new here, we simply edited the inputs in flb_stdout to ["/dc/group/robot", "/dc/measurement/map"] to get the data from the robot group and the map.
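The custom_str_params entries explain where the `robot_name` and `id` fields of each record come from. The sketch below shows one way such entries could be resolved; it is a guess at the behavior, not DC's code. `resolve_params` is hypothetical, stripping the trailing newline of the file is an assumption, and a temporary file stands in for /etc/machine-id so the example runs anywhere.

```python
import tempfile
from pathlib import Path


def resolve_params(names, specs):
    """Resolve each custom string parameter to a field name and value."""
    resolved = {}
    for key in names:
        spec = specs[key]
        if "value" in spec:
            value = spec["value"]
        else:
            # value_from_file: use the file content (assumed to be stripped).
            value = Path(spec["value_from_file"]).read_text().strip()
        resolved[spec["name"]] = value
    return resolved


with tempfile.TemporaryDirectory() as tmp:
    machine_id = Path(tmp) / "machine-id"  # stand-in for /etc/machine-id
    machine_id.write_text("be781e5ffb1e7ee4f817fe7b63e92c32\n")
    specs = {
        "robot_name": {"name": "robot_name", "value": "C3PO"},
        "id": {"name": "id", "value_from_file": str(machine_id)},
    }
    fields = resolve_params(["robot_name", "id"], specs)

record = {"date": 1677694816.690489, "name": "map", **fields}
print(record)
```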

Console output

Now that the node has started, let us see what is displayed in the console:

[component_container_isolated-1] [INFO] [1677696067.792012776] [dc_container]: Load Library: /root/ws/install/dc_measurements/lib/libmeasurement_server_core.so

[component_container_isolated-1] [INFO] [1677696067.795921257] [dc_container]: Found class: rclcpp_components::NodeFactoryTemplate<measurement_server::MeasurementServer>

[component_container_isolated-1] [INFO] [1677696067.795956318] [dc_container]: Instantiate class: rclcpp_components::NodeFactoryTemplate<measurement_server::MeasurementServer>

[component_container_isolated-1] [INFO] [1677696067.811804437] [measurement_server]:

[component_container_isolated-1] measurement_server lifecycle node launched.

[component_container_isolated-1] Waiting on external lifecycle transitions to activate

[component_container_isolated-1] See https://design.ros2.org/articles/node_lifecycle.html for more information.

[component_container_isolated-1] [INFO] [1677696067.818755098] [measurement_server]: Base save path expanded to /root/dc_data/

[component_container_isolated-1] [INFO] [1677696067.818799565] [measurement_server]: All Base path expanded to C3PO/%Y/%m/%d/%H

[INFO] [launch_ros.actions.load_composable_nodes]: Loaded node '/measurement_server' in container 'dc_container'

[component_container_isolated-1] [INFO] [1677696067.821344112] [dc_container]: Load Library: /root/ws/install/dc_destinations/lib/libdestination_server_core.so

[component_container_isolated-1] [INFO] [1677696067.826089144] [dc_container]: Found class: rclcpp_components::NodeFactoryTemplate<destination_server::DestinationServer>

[component_container_isolated-1] [INFO] [1677696067.826138848] [dc_container]: Instantiate class: rclcpp_components::NodeFactoryTemplate<destination_server::DestinationServer>

[component_container_isolated-1] [INFO] [1677696067.845160790] [destination_server]:

[component_container_isolated-1] destination_server lifecycle node launched.

[component_container_isolated-1] Waiting on external lifecycle transitions to activate

[component_container_isolated-1] See https://design.ros2.org/articles/node_lifecycle.html for more information.

[INFO] [launch_ros.actions.load_composable_nodes]: Loaded node '/destination_server' in container 'dc_container'

[component_container_isolated-1] [INFO] [1677696067.856784495] [dc_container]: Load Library: /opt/ros/humble/lib/libnav2_lifecycle_manager_core.so

[component_container_isolated-1] [INFO] [1677696067.857836219] [dc_container]: Found class: rclcpp_components::NodeFactoryTemplate<nav2_lifecycle_manager::LifecycleManager>

[component_container_isolated-1] [INFO] [1677696067.857877118] [dc_container]: Instantiate class: rclcpp_components::NodeFactoryTemplate<nav2_lifecycle_manager::LifecycleManager>

[component_container_isolated-1] [INFO] [1677696067.875491851] [lifecycle_manager_navigation]: Creating

[INFO] [launch_ros.actions.load_composable_nodes]: Loaded node '/lifecycle_manager_navigation' in container 'dc_container'

[component_container_isolated-1] [INFO] [1677696067.881290824] [lifecycle_manager_navigation]: Creating and initializing lifecycle service clients

[component_container_isolated-1] [INFO] [1677696067.889491127] [lifecycle_manager_navigation]: Starting managed nodes bringup...

The measurement server and destination server start as lifecycle nodes. You can read more about it in the node lifecycle article linked in the log above.

"Base save path" and "All Base path" are also saved and expanded. Note "=robot_name" has been replaced by C3PO:

[component_container_isolated-1] [INFO] [1677696067.818755098] [measurement_server]: Base save path expanded to /root/dc_data/

[component_container_isolated-1] [INFO] [1677696067.818799565] [measurement_server]: All Base path expanded to C3PO/%Y/%m/%d/%H

Afterward, the measurement_server starts:

[component_container_isolated-1] [INFO] [1677694796.667666978] [lifecycle_manager_navigation]: Configuring measurement_server

[component_container_isolated-1] [INFO] [1677694796.667887325] [measurement_server]: Configuring

[component_container_isolated-1] [INFO] [1677694796.680348817] [measurement_server]: Creating measurement plugin cmd_vel: Type dc_measurements/CmdVel, Group key: cmd_vel, Polling interval: 1000, Debug: 0, Validator enabled: 1, Schema path: , Tags: [flb_stdout], Init collect: 1, Init Max measurement: 0, Include measurement name: 0, Include measurement plugin name: 0, Remote keys: , Remote prefixes: , Include measurement plugin name: 0, Max measurement on condition: 0, If all condition: , If any condition: , If none condition:

[component_container_isolated-1] [INFO] [1677694796.682517594] [measurement_server]: Configuring cmd_vel

[component_container_isolated-1] [INFO] [1677694796.684392643] [measurement_server]: Done configuring cmd_vel

[component_container_isolated-1] [INFO] [1677694796.684643767] [measurement_server]: Looking for schema at /root/ws/install/dc_measurements/share/dc_measurements/plugins/measurements/json/cmd_vel.json

[component_container_isolated-1] [INFO] [1677694796.684685350] [measurement_server]: schema: {"$defs":{"vector3":{"properties":{"x":{"description":"X speed","type":"number"},"y":{"description":"Y speed","type":"number"},"z":{"description":"Z speed","type":"number"}},"type":"object"}},"$schema":"http://json-schema.org/draft-07/schema#","description":"Command velocity sent to the robot","properties":{"angular":{"description":"Angular velocity as a vector","items":{"$ref":"#/$defs/vector3"},"type":"object"},"linear":{"description":"Linear velocity as a vector","items":{"$ref":"#/$defs/vector3"},"type":"object"}},"title":"Cmd_vel","type":"object"}

[component_container_isolated-1] [INFO] [1677694796.688143164] [measurement_server]: Creating measurement plugin map: Type dc_measurements/Map, Group key: map, Polling interval: 5000, Debug: 0, Validator enabled: 1, Schema path: , Tags: [flb_stdout], Init collect: 1, Init Max measurement: 0, Include measurement name: 1, Include measurement plugin name: 0, Remote keys: minio, Remote prefixes: , Include measurement plugin name: 0, Max measurement on condition: 0, If all condition: , If any condition: , If none condition:

[component_container_isolated-1] [INFO] [1677694796.689090088] [measurement_server]: Configuring map

[component_container_isolated-1] [INFO] [1677694796.690180661] [measurement_server]: Done configuring map

[component_container_isolated-1] [INFO] [1677694796.690522923] [measurement_server]: Looking for schema at /root/ws/install/dc_measurements/share/dc_measurements/plugins/measurements/json/map.json

[component_container_isolated-1] [INFO] [1677694796.690587274] [measurement_server]: schema: {"$defs":{"origin":{"description":"The 2-D pose of the lower-left pixel in the map, as (x, y, yaw), with yaw as counterclockwise rotation (yaw=0 means no rotation). Many parts of the system currently ignore yaw.","properties":{"x":{"description":"X origin of the robot","type":"number"},"y":{"description":"Y origin of the robot","type":"number"}},"type":"object"},"paths":{"properties":{"pgm":{"description":"Path to the map PGM file containing the gray-scale image","type":"string"},"yaml":{"description":"Path to the map YAML file containing map metadata","type":"string"}},"type":"object"}},"$schema":"http://json-schema.org/draft-07/schema#","description":"Map saved metadata and paths","properties":{"height":{"description":"Height of the PGM","minimum":0,"type":"integer"},"local_paths":{"description":"Paths where metadata and image are stored","items":{"$ref":"#/$defs/paths"},"type":"object"},"origin":{"description":"Robot origin position in meters","items":{"$ref":"#/$defs/origin"},"type":"object"},"remote_paths":{"additionalProperties":{"items":{"$ref":"#/$defs/paths"},"type":"object"},"description":"Dictionary of paths where metadata and image will be remotely stored","type":"object"},"resolution":{"description":"Resolution of the map, meters/pixel","minimum":0,"type":"number"},"width":{"description":"Width of the PGM","minimum":0,"type":"integer"}},"title":"Map","type":"object"}

[component_container_isolated-1] [INFO] [1677694796.693890952] [measurement_server]: Creating measurement plugin position: Type dc_measurements/Position, Group key: position, Polling interval: 1000, Debug: 0, Validator enabled: 1, Schema path: , Tags: [flb_stdout], Init collect: 1, Init Max measurement: 0, Include measurement name: 0, Include measurement plugin name: 0, Remote keys: , Remote prefixes: , Include measurement plugin name: 0, Max measurement on condition: 0, If all condition: , If any condition: , If none condition:

[component_container_isolated-1] [INFO] [1677694796.694797887] [measurement_server]: Configuring position

[component_container_isolated-1] [INFO] [1677694796.695799962] [measurement_server]: Done configuring position

[component_container_isolated-1] [INFO] [1677694796.696060904] [measurement_server]: Looking for schema at /root/ws/install/dc_measurements/share/dc_measurements/plugins/measurements/json/position.json

[component_container_isolated-1] [INFO] [1677694796.696114257] [measurement_server]: schema: {"$schema":"http://json-schema.org/draft-07/schema#","description":"Position and orientation of the robot","properties":{"x":{"description":"X position of the robot","type":"number"},"y":{"description":"Y position of the robot","type":"number"},"yaw":{"description":"Yaw angle of the robot","type":"number"}},"title":"Position","type":"object"}

[component_container_isolated-1] [INFO] [1677694796.699956883] [measurement_server]: Creating measurement plugin speed: Type dc_measurements/Speed, Group key: speed, Polling interval: 1000, Debug: 0, Validator enabled: 1, Schema path: , Tags: [flb_stdout], Init collect: 1, Init Max measurement: 0, Include measurement name: 0, Include measurement plugin name: 0, Remote keys: , Remote prefixes: , Include measurement plugin name: 0, Max measurement on condition: 0, If all condition: , If any condition: , If none condition:

[component_container_isolated-1] [INFO] [1677694796.701722429] [measurement_server]: Configuring speed

[component_container_isolated-1] [INFO] [1677694796.703057966] [measurement_server]: Done configuring speed

[component_container_isolated-1] [INFO] [1677694796.703357744] [measurement_server]: Looking for schema at /root/ws/install/dc_measurements/share/dc_measurements/plugins/measurements/json/speed.json

[component_container_isolated-1] [INFO] [1677694796.703413430] [measurement_server]: schema: {"$defs":{"vector3":{"properties":{"x":{"description":"X speed","type":"number"},"y":{"description":"Y speed","type":"number"},"z":{"description":"Z speed","type":"number"}},"type":"object"}},"$schema":"http://json-schema.org/draft-07/schema#","description":"Computed, linear and angular speed of the robot","properties":{"angular":{"description":"Angular velocity as a vector","items":{"$ref":"#/$defs/vector3"},"type":"object"},"computed":{"description":"Computed speed in meter/s","type":"number"},"linear":{"description":"Linear velocity as a vector","items":{"$ref":"#/$defs/vector3"},"type":"object"}},"title":"Speed","type":"object"}

Plugins are loaded one by one (here cmd_vel, map, position and speed) and configured. The configuration of each plugin is displayed, and its validation schema is loaded and its path printed.
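To give an idea of what the validator does with such a schema, here is a deliberately tiny check that only compares the declared type of each top-level property. It is a stand-in for illustration, not a JSON Schema implementation and not the validator DC uses. The schema is the Position schema from the log above with descriptions omitted, and `type_errors` is a hypothetical helper.

```python
import json

# Position schema as printed in the log above (descriptions omitted).
POSITION_SCHEMA = json.loads("""
{
  "$schema": "http://json-schema.org/draft-07/schema#",
  "title": "Position",
  "type": "object",
  "properties": {
    "x": {"type": "number"},
    "y": {"type": "number"},
    "yaw": {"type": "number"}
  }
}
""")

TYPE_CHECKS = {
    "number": lambda v: isinstance(v, (int, float)) and not isinstance(v, bool),
    "integer": lambda v: isinstance(v, int) and not isinstance(v, bool),
    "string": lambda v: isinstance(v, str),
    "object": lambda v: isinstance(v, dict),
}


def type_errors(record, schema):
    """Return one message per top-level property whose type does not match."""
    expected_root = schema.get("type", "object")
    if not TYPE_CHECKS[expected_root](record):
        return ["record: expected " + expected_root]
    errors = []
    for key, rule in schema.get("properties", {}).items():
        expected = rule.get("type")
        if key in record and expected in TYPE_CHECKS:
            if not TYPE_CHECKS[expected](record[key]):
                errors.append(f"{key}: expected {expected}")
    return errors


good = {"x": -0.6033772358727438, "yaw": -0.7146495585355921, "y": -1.633862970534114}
bad = {**good, "x": "left"}
print(type_errors(good, POSITION_SCHEMA))
print(type_errors(bad, POSITION_SCHEMA))
```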

Then, the destination_server starts:

[component_container_isolated-1] [INFO] [1677694796.706019709] [lifecycle_manager_navigation]: Configuring destination_server

[component_container_isolated-1] [INFO] [1677694796.706162231] [destination_server]: Configuring

[component_container_isolated-1] [INFO] [1677694796.706907595] [destination_server]: Fluent Bit service initialized

[component_container_isolated-1] [INFO] [1677694796.707868171] [destination_server]: Creating destination plugin flb_stdout: Type dc_destinations/FlbStdout, Debug: 0, Time format: double. Time key: date

[component_container_isolated-1] [INFO] [1677694796.709554983] [destination_server]: Configuring Flb plugin flb_stdout

[component_container_isolated-1] [INFO] [1677694796.710263626] [destination_server]: Loaded lua filter. Match=ros2, code=function concatenate(tag, timestamp, record) if (type(record["tags"]) == "table") then record["tags"] = table.concat(record["tags"], ",") end return 2, timestamp, record end

[component_container_isolated-1] [INFO] [1677694796.710309154] [destination_server]: Loaded rewrite_tag filter. Match=ros2, Rule=$tags .*(flb_stdout).* flb_stdout true

[component_container_isolated-1] [INFO] [1677694796.710382723] [destination_server]: Done configuring Flb plugin flb_stdout

[component_container_isolated-1] [INFO] [1677694796.710401937] [destination_server]: Loading input ros2 shared library /root/ws/install/fluent_bit_plugins/lib/flb-in_ros2.so...

[component_container_isolated-1] [INFO] [1677694796.711494520] [destination_server]: Loaded input ros2 shared library /root/ws/install/fluent_bit_plugins/lib/flb-in_ros2.so

[component_container_isolated-1] [INFO] [1677694796.711617719] [destination_server]: Flb ros2 plugin initialized. ret=0

[component_container_isolated-1] [INFO] [1677694796.711638189] [destination_server]: Starting Flb engine...

[component_container_isolated-1] [2023/03/01 18:19:56] [ info] [fluent bit] version=2.0.7, commit=1ab360f79c, pid=79925

[component_container_isolated-1] [2023/03/01 18:19:56] [ info] [storage] ver=1.3.0, type=memory+filesystem, sync=full, checksum=off, max_chunks_up=128

[component_container_isolated-1] [2023/03/01 18:19:56] [ info] [storage] backlog input plugin: storage_backlog.1

[component_container_isolated-1] [2023/03/01 18:19:56] [ info] [cmetrics] version=0.5.7

[component_container_isolated-1] [2023/03/01 18:19:56] [ info] [ctraces ] version=0.2.5

[component_container_isolated-1] [2023/03/01 18:19:56] [ info] [input:ros2:ros2.0] initializing

[component_container_isolated-1] [2023/03/01 18:19:56] [ info] [input:ros2:ros2.0] storage_strategy='filesystem' (memory + filesystem)

[component_container_isolated-1] [2023/03/01 18:19:56] [ info] Started node fluentbit_rclc

[component_container_isolated-1] [2023/03/01 18:19:56] [ info] Created subscriber /dc/group/robot

[component_container_isolated-1] [2023/03/01 18:19:56] [ info] Created subscriber /dc/measurement/map

[component_container_isolated-1] [2023/03/01 18:19:56] [ info] [input:storage_backlog:storage_backlog.1] initializing

[component_container_isolated-1] [2023/03/01 18:19:56] [ info] [input:storage_backlog:storage_backlog.1] storage_strategy='memory' (memory only)

[component_container_isolated-1] [2023/03/01 18:19:56] [ info] [input:storage_backlog:storage_backlog.1] queue memory limit: 976.6K

[component_container_isolated-1] [2023/03/01 18:19:56] [ info] [input:emitter:emitter_for_rewrite_tag.2] initializing

[component_container_isolated-1] [2023/03/01 18:19:56] [ info] [input:emitter:emitter_for_rewrite_tag.2] storage_strategy='filesystem' (memory + filesystem)

[component_container_isolated-1] [2023/03/01 18:19:56] [ info] [output:stdout:stdout.0] worker #0 started

[component_container_isolated-1] [2023/03/01 18:19:56] [ info] [http_server] listen iface=0.0.0.0 tcp_port=2020

[component_container_isolated-1] [2023/03/01 18:19:56] [ info] [sp] stream processor started

[component_container_isolated-1] [INFO] [1677694796.763937083] [destination_server]: Started Flb engine

Everything is the same as in the previous demos:

It starts the FlbStdout destination plugin and the filters used internally by Fluent Bit to edit timestamps and tags. It then loads the ROS 2 Fluent Bit shared library and initializes it with the topics we provided as parameters.

Afterward, Fluent Bit starts: it prints its version, storage strategy and buffer configuration, and lists which plugins are loaded.
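The two filters printed in the log (the Lua `concatenate` function and the rewrite_tag rule `$tags .*(flb_stdout).* flb_stdout true`) can be mirrored in Python to see what they do to a record. This is an emulation for illustration only: Fluent Bit runs the real filters with its own regex engine, and the trailing `true` in the rule (keep the original record) is ignored here.

```python
import re


def concatenate(record):
    """Mirror the Lua filter: join a list of tags into a comma-separated string."""
    if isinstance(record.get("tags"), list):
        record = {**record, "tags": ",".join(record["tags"])}
    return record


# Regex part of the rewrite_tag rule shown in the log.
RULE = re.compile(r".*(flb_stdout).*")


def rewrite_tag(tag, record):
    """Return the new tag when the record's tags field matches the rule."""
    if RULE.search(str(record.get("tags", ""))):
        return "flb_stdout"
    return tag


incoming = {"tags": ["flb_stdout", "flb_http"], "time": 92395}
joined = concatenate(incoming)
print(joined["tags"])
print(rewrite_tag("ros2", joined))
print(rewrite_tag("ros2", {"tags": "flb_http"}))
```

Records that end up with the `flb_stdout` tag are the ones the stdout output plugin picks up.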

Then, the measurement and destination server nodes are activated:

[component_container_isolated-1] [INFO] [1677668906.745180268] [lifecycle_manager_navigation]: Activating measurement_server

[component_container_isolated-1] [INFO] [1677668906.745462265] [measurement_server]: Activating

[component_container_isolated-1] [INFO] [1677668906.745558665] [measurement_server]: Activating uptime

[component_container_isolated-1] [INFO] [1677668906.746014639] [measurement_server]: Creating bond (measurement_server) to lifecycle manager.

[component_container_isolated-1] [INFO] [1677668906.853199899] [lifecycle_manager_navigation]: Server measurement_server connected with bond.

[component_container_isolated-1] [INFO] [1677668906.853316226] [lifecycle_manager_navigation]: Activating destination_server

[component_container_isolated-1] [INFO] [1677668906.853620571] [destination_server]: Activating

[component_container_isolated-1] [INFO] [1677668906.853722659] [destination_server]: Activating flb_stdout

[component_container_isolated-1] [INFO] [1677668906.853796078] [destination_server]: Creating bond (destination_server) to lifecycle manager.

[component_container_isolated-1] [INFO] [1677668906.961666207] [lifecycle_manager_navigation]: Server destination_server connected with bond.

[component_container_isolated-1] [INFO] [1677668906.961777773] [lifecycle_manager_navigation]: Managed nodes are active

[component_container_isolated-1] [INFO] [1677668906.961820078] [lifecycle_manager_navigation]: Creating bond timer...

Finally, we see the data:

[component_container_isolated-1] [{"remote_paths":{"minio":{"pgm":"C3PO/2023/03/01/18/map/2023-03-01T18:19:56.pgm","yaml":"C3PO/2023/03/01/18/map/2023-03-01T18:19:56.yaml"}},"date":1677694796.765904,"height":384,"name":"map","origin":{"x":-10,"y":-10},"local_paths":{"pgm":"/root/dc_data/C3PO/2023/03/01/18/map/2023-03-01T18:19:56.pgm","yaml":"/root/dc_data/C3PO/2023/03/01/18/map/2023-03-01T18:19:56.yaml"},"resolution":0.05000000074505806,"width":384,"id":"be781e5ffb1e7ee4f817fe7b63e92c32","robot_name":"C3PO","run_id":"240"}]

[component_container_isolated-1] [{"cmd_vel":{"linear":{"x":0.246316,"y":0,"z":0},"angular":{"x":0,"y":0,"z":0.263158}},"date":1677694799.685468,"position":{"x":-0.6033772358727438,"yaw":-0.7146495585355921,"y":-1.633862970534114},"speed":{"angular":{"x":-0.0002542699063698451},"computed":3.279051750818494e-05,"linear":{"x":3.244397182637543e-05,"y":4.754653571390878e-06,"z":0}},"name":"robot","id":"be781e5ffb1e7ee4f817fe7b63e92c32","robot_name":"C3PO","run_id":"240"}]

[component_container_isolated-1] [{"cmd_vel":{"linear":{"x":0.246316,"y":0,"z":0},"angular":{"x":0,"y":0,"z":0.263158}},"date":1677694800.685094,"position":{"x":-0.5307920077356227,"yaw":-0.645872441707418,"y":-1.693502983783379},"speed":{"angular":{"x":0.2127361022319145},"computed":0.2459393937023935,"linear":{"x":0.2459393844826374,"y":-6.734242603700924e-05,"z":0}},"name":"robot","id":"be781e5ffb1e7ee4f817fe7b63e92c32","robot_name":"C3PO","run_id":"240"}]

[component_container_isolated-1] [{"cmd_vel":{"linear":{"x":0.26,"y":0,"z":0},"angular":{"x":0,"y":0,"z":0.368421}},"date":1677694801.685017,"position":{"x":-0.3195900881047479,"yaw":-0.3944485529461182,"y":-1.813946939716471},"speed":{"angular":{"x":0.2623361481938653},"computed":0.24584231041888,"linear":{"x":0.2458423037516516,"y":-5.725533732485466e-05,"z":0}},"name":"robot","id":"be781e5ffb1e7ee4f817fe7b63e92c32","robot_name":"C3PO","run_id":"240"}]
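
Each data line above is the launch prefix followed by a JSON array holding one record. As a minimal sketch (not part of DC), such a line can be decoded with the Python standard library. The helper name `parse_record_line` and the shortened sample record are illustrative assumptions:

```python
import json

# Prefix that ros2 launch puts in front of every line of this container's output.
PREFIX = "[component_container_isolated-1] "


def parse_record_line(line):
    """Return the list of records on a stdout line, or [] for ordinary log lines."""
    if not line.startswith(PREFIX):
        return []
    payload = line[len(PREFIX):]
    try:
        decoded = json.loads(payload)
    except json.JSONDecodeError:
        return []  # e.g. "[INFO] [...] ..." log lines are not valid JSON
    # Keep only JSON arrays of objects, which is the shape shown above.
    if isinstance(decoded, list) and all(isinstance(r, dict) for r in decoded):
        return decoded
    return []


# Shortened copy of one "robot" group record from the output above.
sample = (
    PREFIX
    + '[{"cmd_vel":{"linear":{"x":0.26,"y":0,"z":0},'
    + '"angular":{"x":0,"y":0,"z":0.368421}},'
    + '"date":1677694801.685017,"name":"robot",'
    + '"robot_name":"C3PO","run_id":"240"}]'
)

records = parse_record_line(sample)
for record in records:
    print(record["name"], record["robot_name"], record["run_id"])
```

Returning an empty list for non-JSON lines lets the same helper be run over the whole launch output, log lines included.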

So...what happened?

- The Nav2 turtlebot3 simulation starts, and the robot can localize and move (once you set the 2D Pose Estimate in RViz)

- The measurement plugins start publishing data to /dc/measurement/map, /dc/measurement/cmd_vel, /dc/measurement/position and /dc/measurement/speed. Each message contains the JSON, tags and timestamp

- In parallel, each time the map plugin sends a ROS message, it also saves the files on the filesystem. To see them, open a file browser at the path you set in the configuration, or at a path mentioned in the map JSON

- The "robot" group node subscribes to /dc/measurement/cmd_vel, /dc/measurement/position and /dc/measurement/speed and publishes on /dc/group/robot once it has collected data from all 3 topics

- The run ID and robot_name are appended to the JSON of each record

- The ROS 2 Fluent Bit plugin, which subscribes to the topics, receives the data and passes it on to Fluent Bit

- Fluent Bit receives it, applies the timestamp filter (which modifies the timestamp to the desired format)

- Fluent Bit changes the tag with another filter. This tag is used to match the data to its destination afterward.

- Data is flushed

- The Fluent Bit stdout output plugin receives the data because tags match (the flb_stdout tag is used) and forwards it to Stdout
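
The group step in the list above (wait for all three topics, then publish one merged record with the run ID and robot name appended) can be sketched in plain Python. This is an illustration only, not DC's implementation: the class `GroupSketch`, its method names, and the rule of using the last topic segment as the JSON key are assumptions inferred from the sample output.

```python
class GroupSketch:
    """Hypothetical stand-in for a DC group node; no ROS 2 involved."""

    def __init__(self, name, topics, robot_name, run_id):
        self.name = name
        self.topics = list(topics)
        self.robot_name = robot_name
        self.run_id = run_id
        self.pending = {}  # latest payload received per topic

    def on_message(self, topic, payload):
        """Store a payload; return a merged record once every topic has reported."""
        if topic not in self.topics:
            return None
        self.pending[topic] = payload
        if len(self.pending) < len(self.topics):
            return None  # still waiting for at least one topic
        record = {}
        for t in self.topics:
            key = t.rsplit("/", 1)[-1]  # "/dc/measurement/speed" -> "speed"
            record[key] = self.pending[t]
        # Append the identifying fields, as in the sample JSON.
        record.update(name=self.name, robot_name=self.robot_name, run_id=self.run_id)
        self.pending.clear()
        return record


group = GroupSketch(
    name="robot",
    topics=[
        "/dc/measurement/cmd_vel",
        "/dc/measurement/position",
        "/dc/measurement/speed",
    ],
    robot_name="C3PO",
    run_id="240",
)

first = group.on_message("/dc/measurement/cmd_vel", {"linear": {"x": 0.26}})
second = group.on_message("/dc/measurement/position", {"x": -0.31, "y": -1.81})
merged = group.on_message("/dc/measurement/speed", {"computed": 0.24})
print(first, second)
print(sorted(merged))
```

Only the third call returns a record; the first two return `None` because the group is still incomplete.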

Turtlebot3

In this example, we add a robot and start collecting robot data to PostgreSQL and MinIO.

You will also need 3 terminal windows, to:

- Run the Nav2 turtlebot3 launchfile: it starts localization, navigation and RViz

- Run navigation inspection demo

- Run DC

Using a separate terminal window for DC makes its output easier to read.

Packages in the workspace

In addition to the ros2_data_collection repo in your workspace, you will need to download the aws warehouse package:

cd src

git clone https://github.com/aws-robotics/aws-robomaker-small-warehouse-world.git -b ros2

cd ..

colcon build

Setup the environment

Python dependencies

For this tutorial, we will need to install all dependencies:

pip3 install -r requirements.txt -r requirements-dev.txt

Setup Infrastructure

MinIO

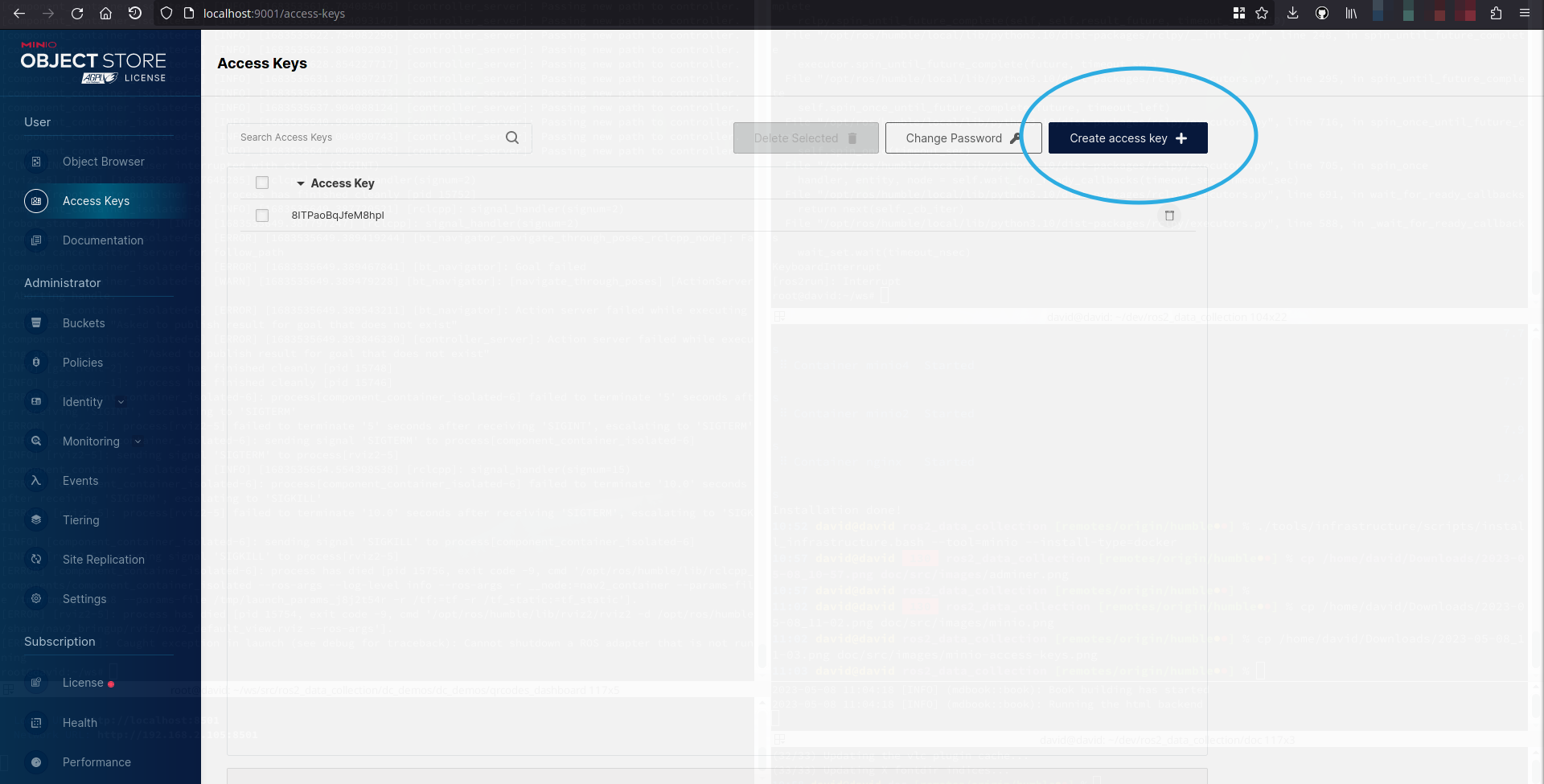

MinIO will be used as storage for images and other files. To start it, follow the steps

Once you have your keys, open the YAML configuration file at dc_demos/params/tb3_simulation_pgsql_minio.yaml and update every instance of:

- access_key_id

- secret_access_key
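
Since the keys can appear in several places, a scripted replacement avoids missing an instance. The sketch below works on a made-up YAML fragment (the names `plugin_a` and `plugin_b` are placeholders, not the real structure of the demo file) and uses only the standard library. The helper `set_yaml_value` is hypothetical, and it assumes each key sits on its own `key: value` line:

```python
import re


def set_yaml_value(text, key, value):
    """Replace the value of every `key: ...` line; return (new_text, count)."""
    pattern = re.compile(
        rf"^([ \t]*{re.escape(key)}:[ \t]*).*$", flags=re.MULTILINE
    )
    # A function replacement avoids backslash escapes in `value` being interpreted.
    # Values are written double-quoted, so they must not contain a double quote.
    return pattern.subn(lambda m: f'{m.group(1)}"{value}"', text)


# Made-up fragment for illustration only.
SAMPLE = """\
plugin_a:
  access_key_id: CHANGE_ME
  secret_access_key: CHANGE_ME
plugin_b:
  access_key_id: CHANGE_ME
  secret_access_key: CHANGE_ME
"""

text, n_ids = set_yaml_value(SAMPLE, "access_key_id", "my-access-key")
text, n_secrets = set_yaml_value(text, "secret_access_key", "my-secret-key")
print(n_ids, n_secrets)
print(text)
```

To apply it to the real file, read the file's text, run both replacements, check that the counts are what you expect, and write the result back.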

PostgreSQL

PostgreSQL will be used as database storage for our JSON. Later on, backend engineers can query those JSON records by measurement and time range. To start it, follow the steps

The default YAML configuration file needs no changes, since it also uses the default values.
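
The kind of selection mentioned above, by measurement and time range, can be sketched in plain Python over records that have already been loaded. It relies only on the `name` and `date` fields visible in the sample JSON; the function name `select_records` is an assumption, and a real backend would more likely express the same filter as a database query:

```python
from datetime import datetime, timezone


def select_records(records, name, start, end):
    """Keep records of one measurement whose date falls in [start, end)."""
    start_ts, end_ts = start.timestamp(), end.timestamp()
    return [
        r
        for r in records
        if r.get("name") == name
        and "date" in r
        and start_ts <= r["date"] < end_ts
    ]


# Trimmed copies of the records printed in the Stdout demo.
records = [
    {"name": "map", "date": 1677694796.765904, "robot_name": "C3PO"},
    {"name": "robot", "date": 1677694799.685468, "robot_name": "C3PO"},
    {"name": "robot", "date": 1677694800.685094, "robot_name": "C3PO"},
    {"name": "robot", "date": 1677694801.685017, "robot_name": "C3PO"},
]

# "date" is a Unix timestamp in seconds, so the window is given in UTC.
window_start = datetime(2023, 3, 1, 18, 20, 0, tzinfo=timezone.utc)
window_end = datetime(2023, 3, 1, 18, 20, 2, tzinfo=timezone.utc)

selected = select_records(records, "robot", window_start, window_end)
print(len(selected))
```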

Setup simulation environment

In terminal 1, source your environment and set up the TurtleBot configuration:

source /opt/ros/humble/setup.bash

source install/setup.bash

export GAZEBO_MODEL_PATH=$GAZEBO_MODEL_PATH:/opt/ros/humble/share/turtlebot3_gazebo/models

export GAZEBO_RESOURCE_PATH=${PWD}/src/aws-robomaker-small-warehouse-world/

export TURTLEBOT3_MODEL=waffle

source /usr/share/gazebo/setup.bash

Verify that the Gazebo world loads properly:

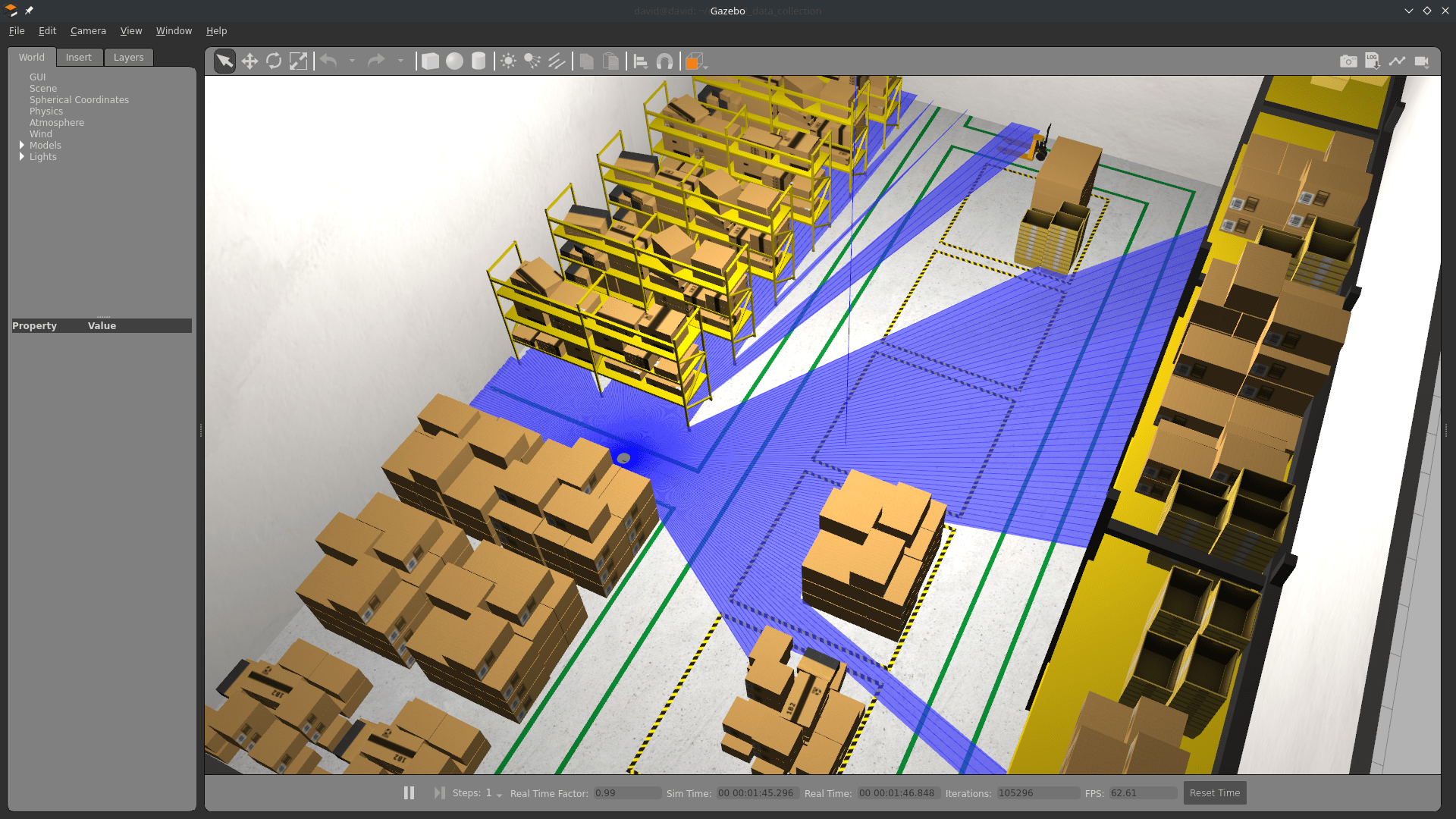

gazebo /opt/ros/humble/share/aws_robomaker_small_warehouse_world/worlds/small_warehouse/small_warehouse.world

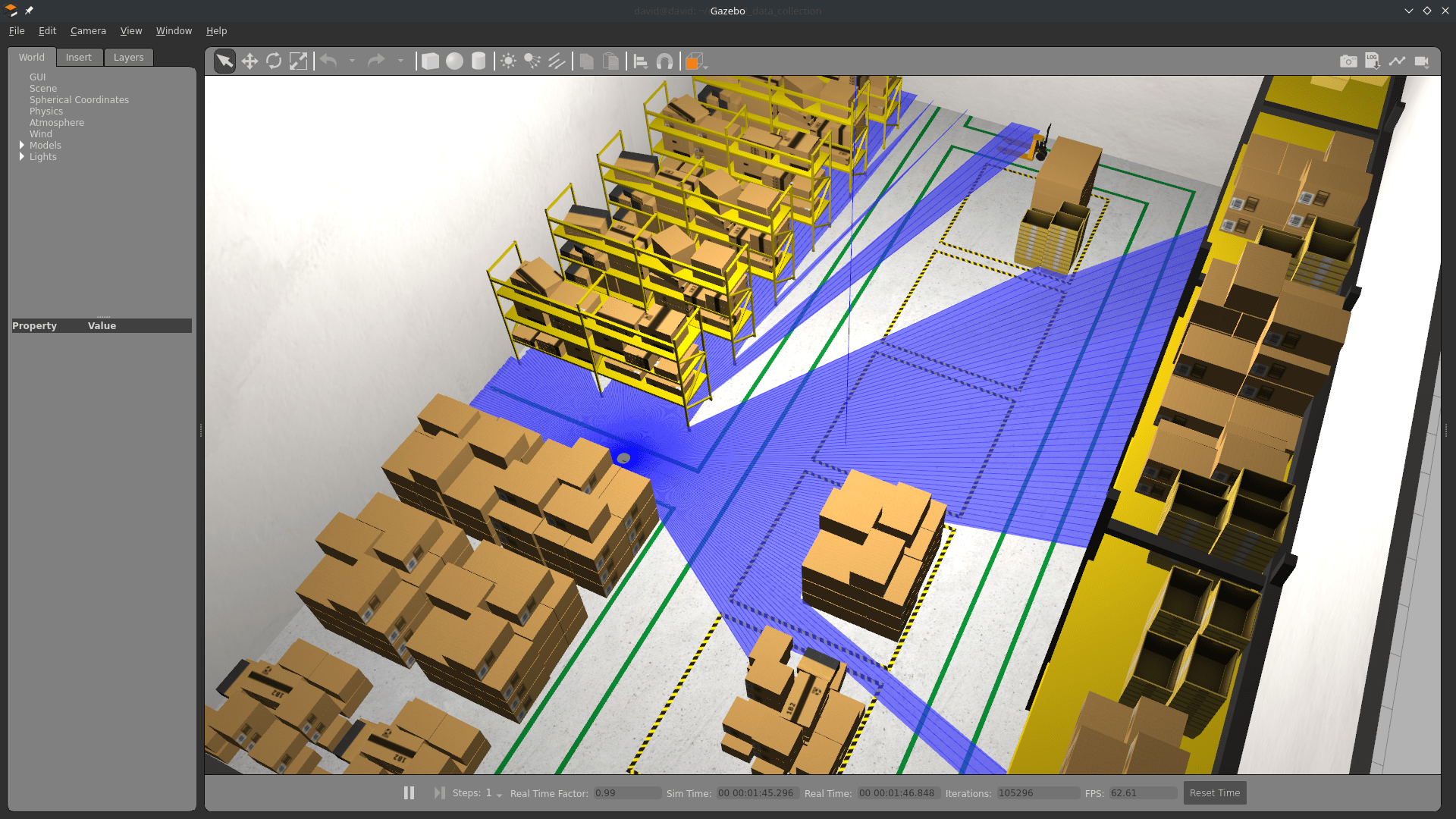

Gazebo will start with the warehouse environment. You can close it now.

I believe sourcing the Gazebo setup file along with those exports is needed because of this issue

Terminal 1: Start Navigation

Then, in the same terminal (1), start the Turtlebot launchfile:

ros2 launch nav2_bringup tb3_simulation_launch.py \

world:=/opt/ros/humble/share/aws_robomaker_small_warehouse_world/worlds/no_roof_small_warehouse/no_roof_small_warehouse.world \

map:=/opt/ros/humble/share/aws_robomaker_small_warehouse_world/maps/005/map.yaml \

headless:=False \

x_pose:=3.45 \

y_pose:=2.15 \

yaw:=3.14

RViz and Gazebo will start: now you see the robot in Gazebo, and the map on RViz.

Terminal 2: Start DC

Run colcon build to compile the workspace:

colcon build

Now, start the demo:

ros2 launch dc_demos tb3_simulation_pgsql_minio.launch.py

The robot will start collecting data.

Terminal 3: Start autonomous navigation

Execute

ros2 run nav2_simple_commander demo_security

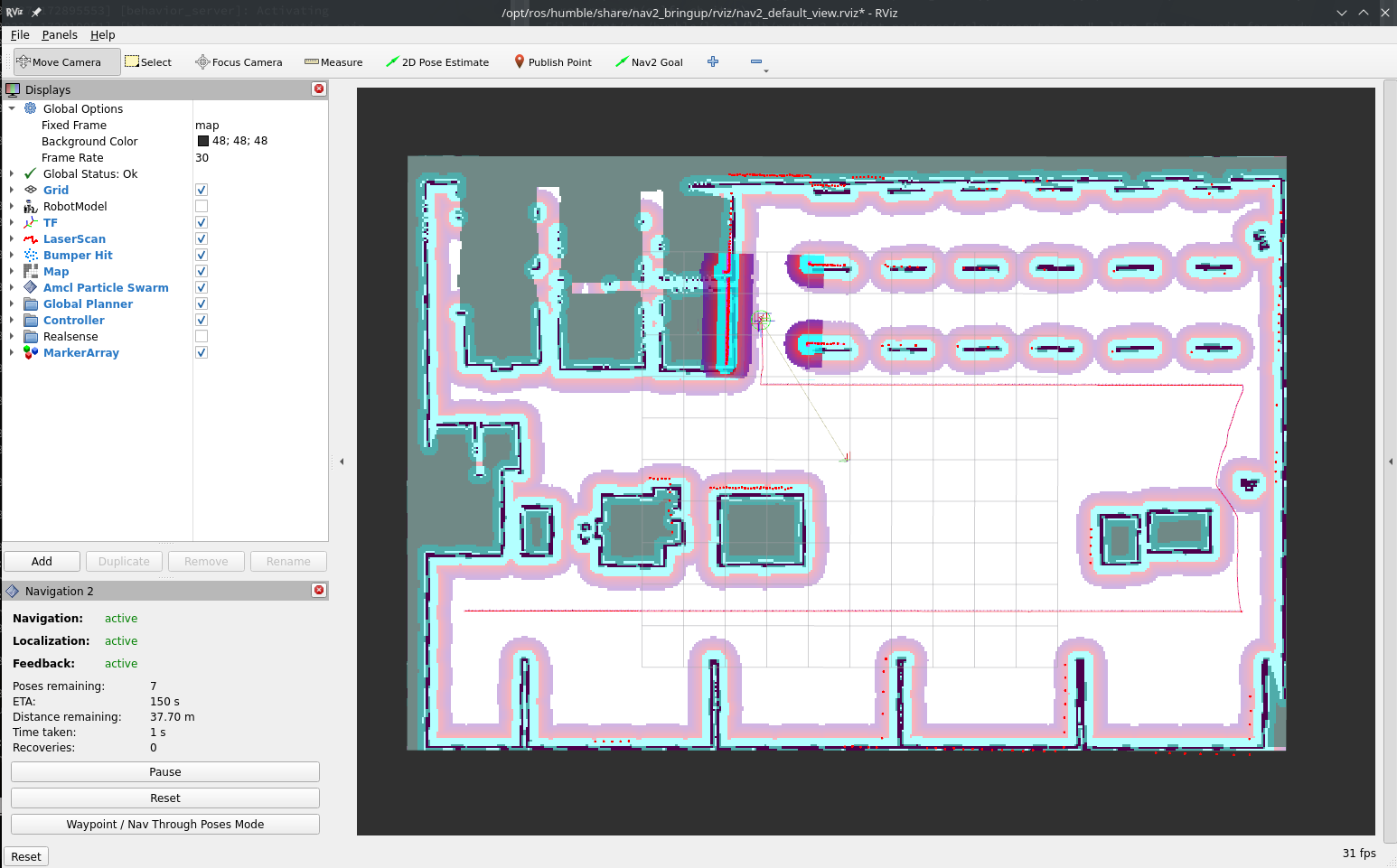

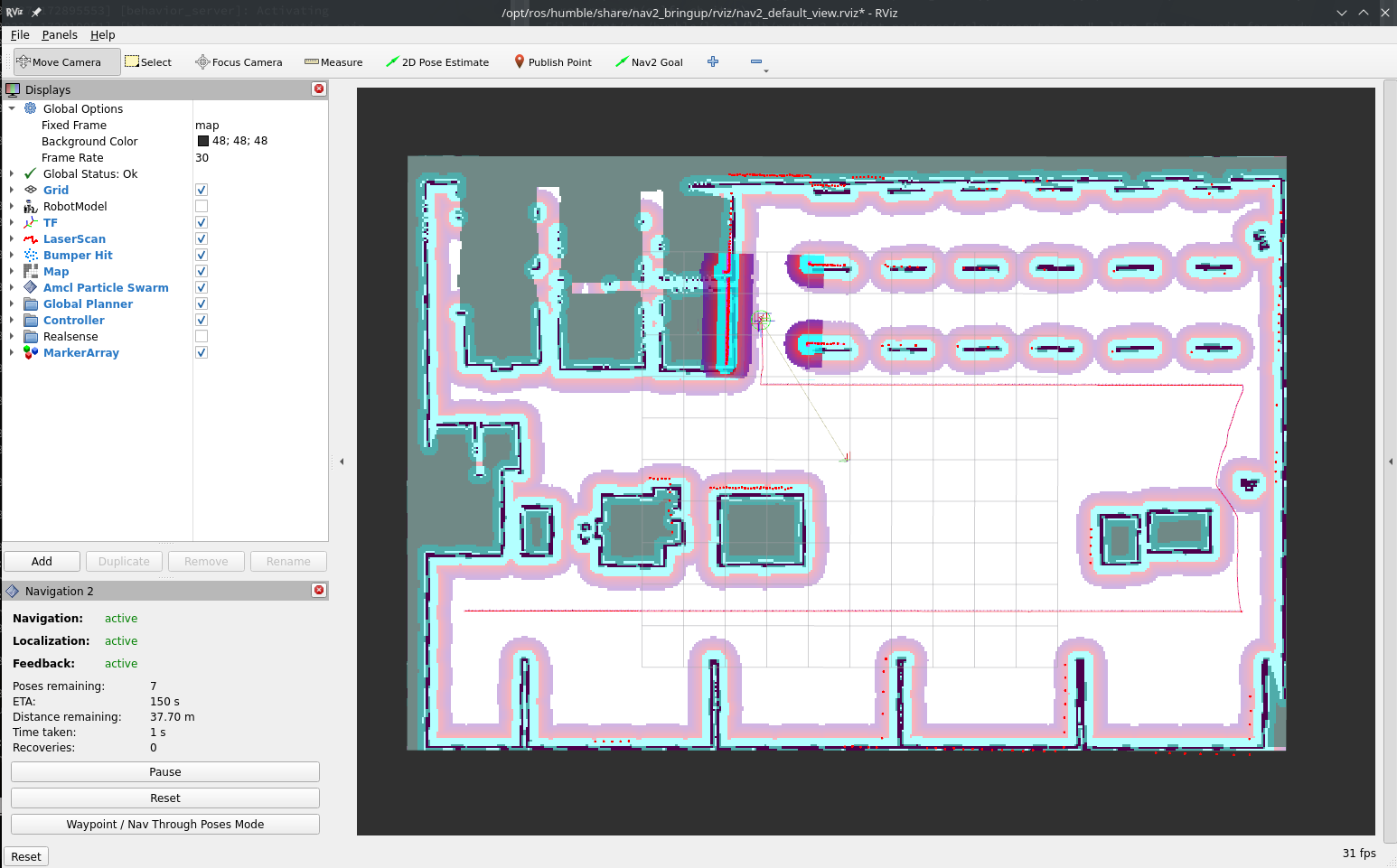

The robot will start moving and you will be able to see all visualizations activated in RViz:

Visualize the data

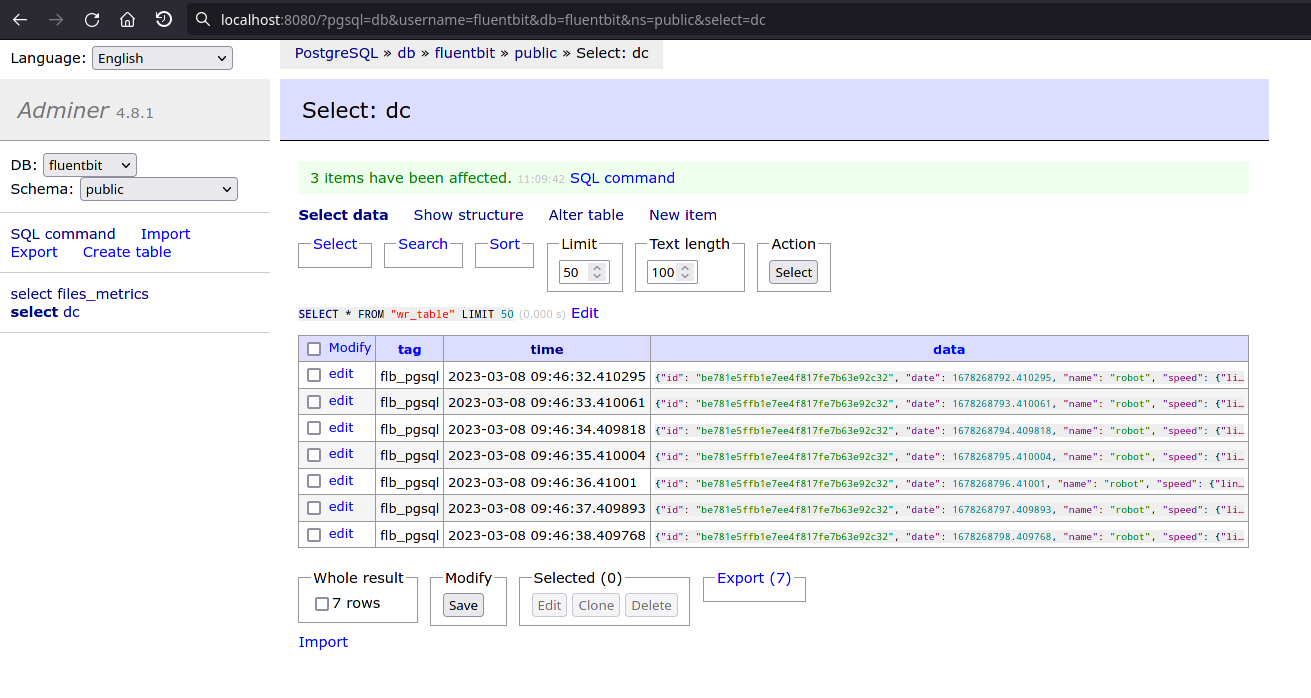

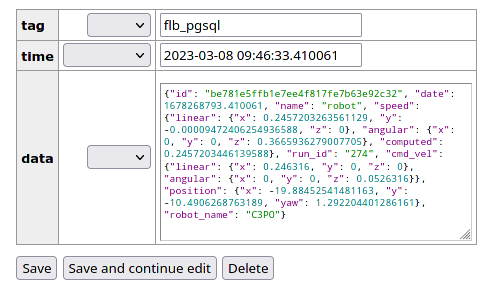

In the database

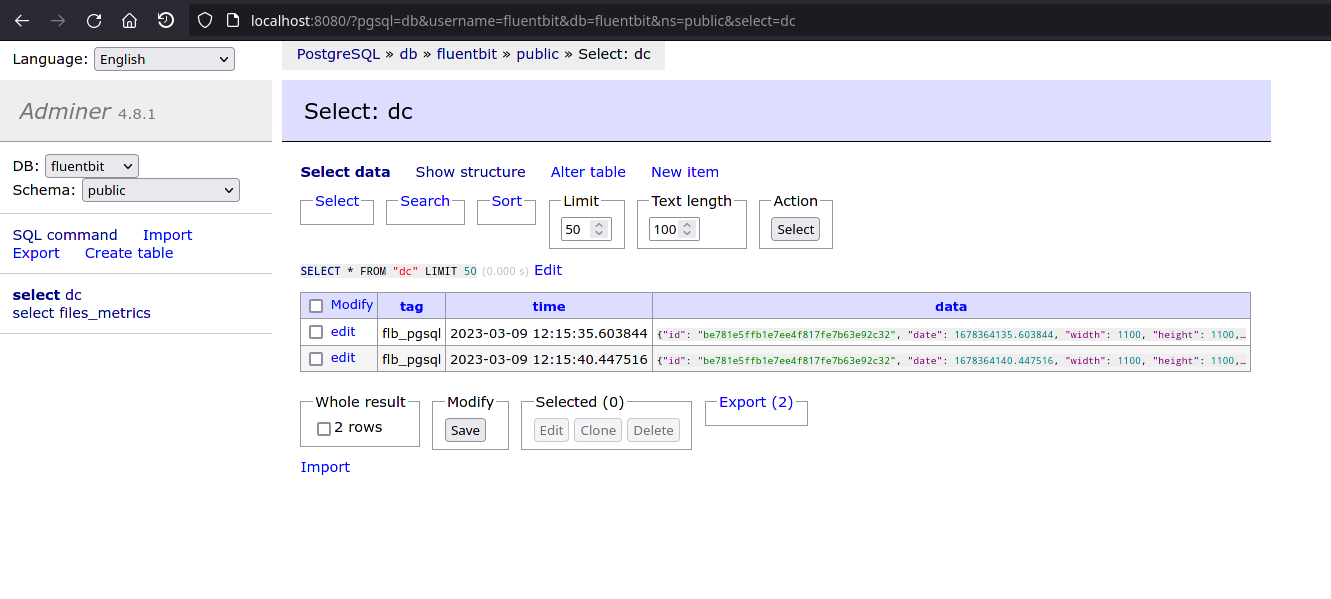

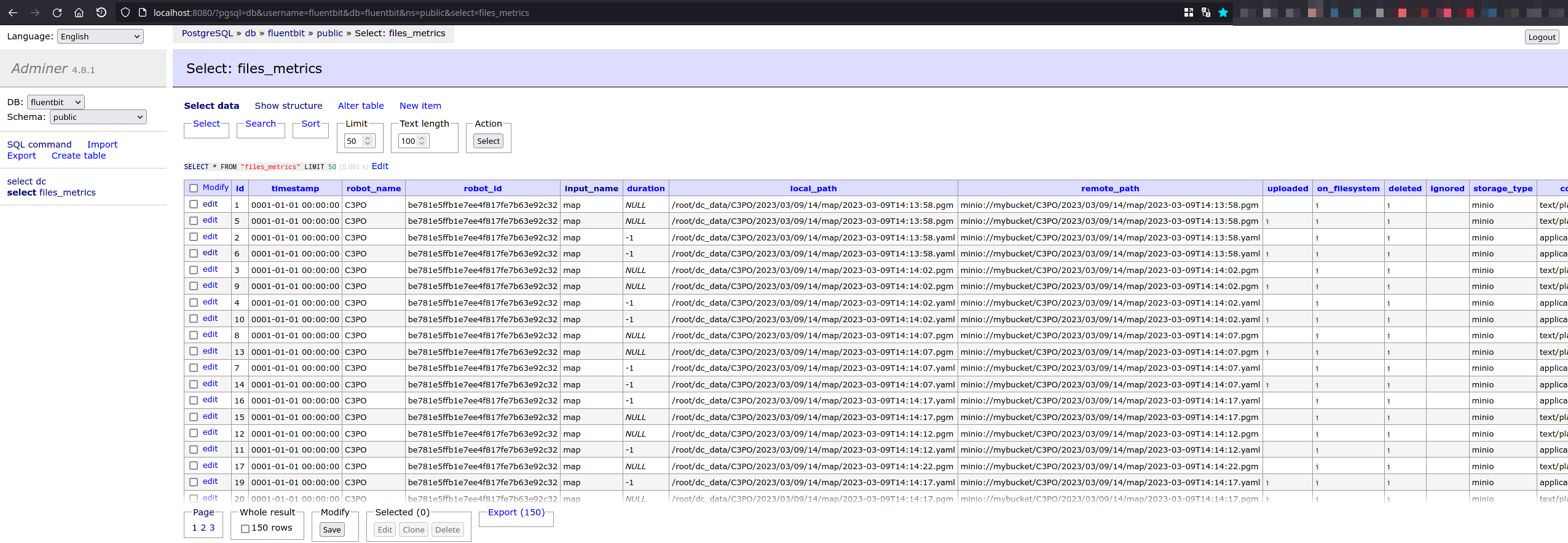

Navigate to localhost:8080

- Select dc database

- Select dc table

- Click on Select data

You will see rows filling the database. You can click on one to see its content:

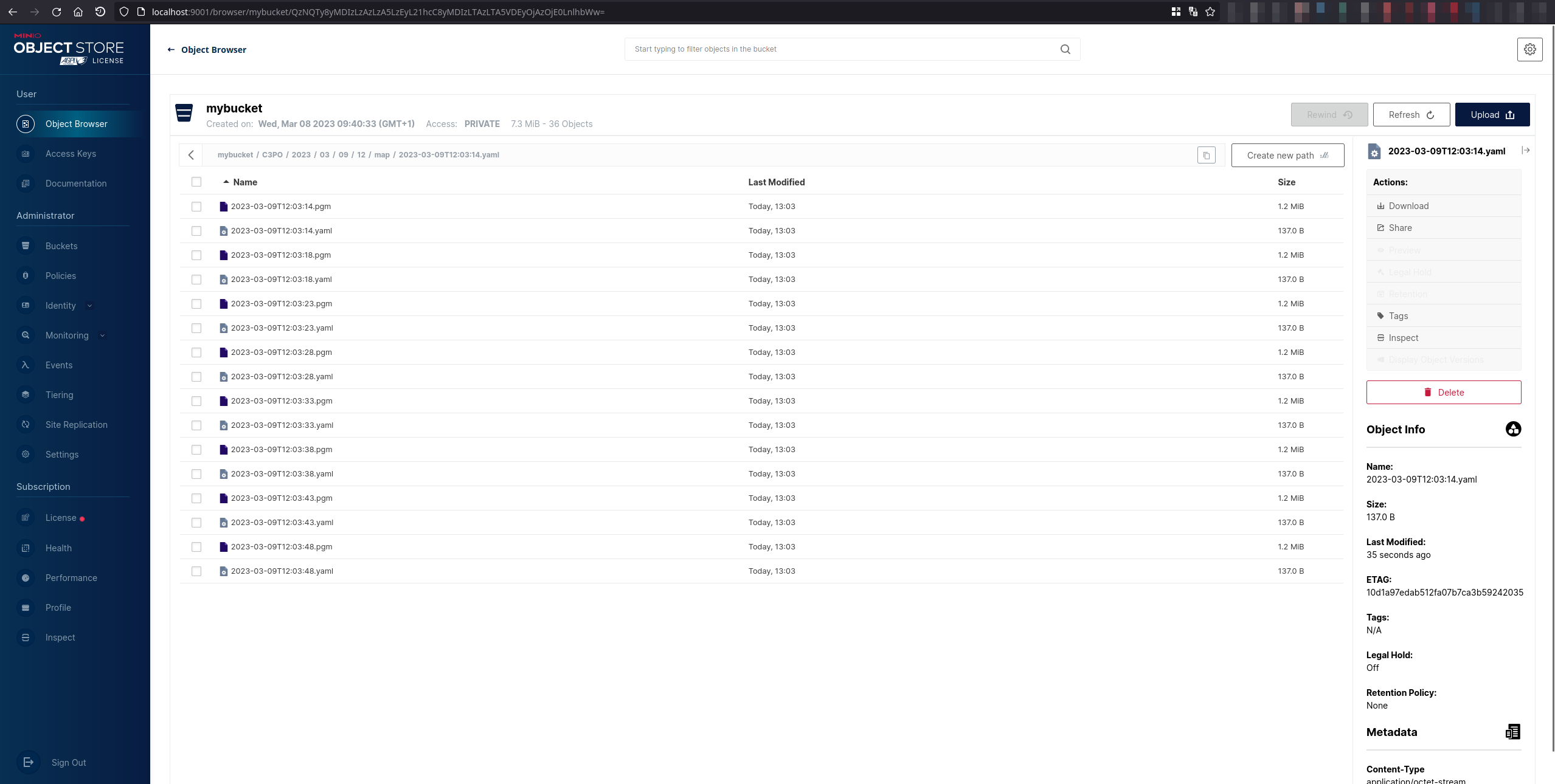

In MinIO (file storage)

Navigate to localhost:9001

- Select mybucket bucket

- Click until you reach camera or map

- Continue until you reach a file, and click on preview (pgm can't be previewed)

With Streamlit dashboard

A dashboard has been developed to help visualize the data collected.

Execute in a new terminal:

cd src/ros2_data_collection/dc_demos/dc_demos/streamlit_dashboard/

# Replace by what was created at the start of this demo

export MINIO_ACCESS_KEY=<YOUR_ACCESS_KEY>

# Replace by what was created at the start of this demo

export MINIO_SECRET_KEY=<YOUR_SECRET_KEY>

streamlit run Streamlit_ROS_2.py

# This will be printed:

You can now view your Streamlit app in your browser.

Local URL: http://localhost:8502

Network URL: http://192.168.2.105:8502

Open the address in your browser: localhost:8502.

That's it! Now you can collect your data!

Understanding the configuration

The full configuration file can be found here.

Measurement server

Measurements

measurement_plugins sets which plugins to load. We collect:

System measurements:

Robot measurements:

Environment measurements:

Infrastructure measurements:

Each has its own configuration: polling interval, source topic, destination paths, topics used as input, etc. Going through all of them would take too long here, but you can check the documentation of each measurement and the general documentation of measurements.

Conditions

We also initialize conditions:

- min_distance_traveled

- max_distance_traveled

They are used in the distance traveled measurement to keep only values within a certain range.
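
As a rough illustration of how two such conditions bound a range, the sketch below models each condition as a predicate and keeps a value only when all of them pass. The threshold values, the function bodies and the "all conditions must pass" rule are assumptions made for the example, not DC's implementation or configuration:

```python
def min_distance_traveled(threshold):
    """Condition that passes when the distance is at least `threshold`."""
    return lambda distance: distance >= threshold


def max_distance_traveled(threshold):
    """Condition that passes when the distance is at most `threshold`."""
    return lambda distance: distance <= threshold


# Hypothetical thresholds, in meters.
conditions = [min_distance_traveled(0.1), max_distance_traveled(5.0)]

samples = [0.02, 0.5, 3.0, 12.0]
kept = [d for d in samples if all(check(d) for check in conditions)]
print(kept)  # [0.5, 3.0]
```

Values below the minimum (0.02) and above the maximum (12.0) are dropped, so only plausible distances between two samples are collected.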

Destination server

Here we enable the PostgreSQL and MinIO plugins, since that is where we send the data.

PostgreSQL destination

We pass all topics generated by measurements. The node subscribes to them and transfers the data to PostgreSQL. Note that not all data needs to be sent to PostgreSQL; some could be sent elsewhere.

Along with the inputs, we pass the server configuration: username, password, database name etc.

MinIO destination

We pass the topics we want to subscribe to. Here, these are only map and camera, since those are the ones generating files.

The src_fields and upload_fields parameters name the fields in the JSON sent by the measurement where the local and remote paths are stored, so the plugin knows which files to upload and where to put them.
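
To make the pairing of source and upload fields concrete, here is a minimal sketch that looks up both kinds of field in a trimmed copy of the map record shown in the Stdout demo. The dotted-path notation, the helper names `resolve` and `plan_uploads`, and the example field lists are assumptions for illustration; check the MinIO destination documentation for the exact syntax DC expects:

```python
def resolve(record, dotted_path):
    """Follow a dotted path such as "remote_paths.minio.pgm" into nested dicts."""
    value = record
    for key in dotted_path.split("."):
        value = value[key]
    return value


def plan_uploads(record, src_fields, upload_fields):
    """Pair each local file path with the remote path it should be uploaded to."""
    return [
        (resolve(record, src), resolve(record, dst))
        for src, dst in zip(src_fields, upload_fields)
    ]


# Trimmed copy of the map record from the sample output.
map_record = {
    "name": "map",
    "local_paths": {
        "pgm": "/root/dc_data/C3PO/2023/03/01/18/map/2023-03-01T18:19:56.pgm",
        "yaml": "/root/dc_data/C3PO/2023/03/01/18/map/2023-03-01T18:19:56.yaml",
    },
    "remote_paths": {
        "minio": {
            "pgm": "C3PO/2023/03/01/18/map/2023-03-01T18:19:56.pgm",
            "yaml": "C3PO/2023/03/01/18/map/2023-03-01T18:19:56.yaml",
        }
    },
}

# Hypothetical field lists, written in dotted notation.
src_fields = ["local_paths.pgm", "local_paths.yaml"]
upload_fields = ["remote_paths.minio.pgm", "remote_paths.minio.yaml"]

uploads = plan_uploads(map_record, src_fields, upload_fields)
for local_path, remote_path in uploads:
    print(local_path, "->", remote_path)
```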

Turtlebot3

In this example, we add a robot and start collecting robot data to InfluxDB.

You will also need 3 terminal windows, to:

- Run the Nav2 turtlebot3 launchfile: it starts localization, navigation and RViz

- Run navigation inspection demo

- Run DC

Using a separate terminal window for DC makes its output easier to read.

Packages in the workspace

In addition to the ros2_data_collection repo in your workspace, you will need to download the aws warehouse package:

cd src

git clone https://github.com/aws-robotics/aws-robomaker-small-warehouse-world.git -b ros2

cd ..

colcon build

Setup the environment

Python dependencies

For this tutorial, we will need to install all dependencies:

pip3 install -r requirements.txt -r requirements-dev.txt

Setup the infrastructure

InfluxDB

InfluxDB will be used to store our data and timestamps. Later on, backend engineers can query this data by measurement and time range. To start it, follow the steps

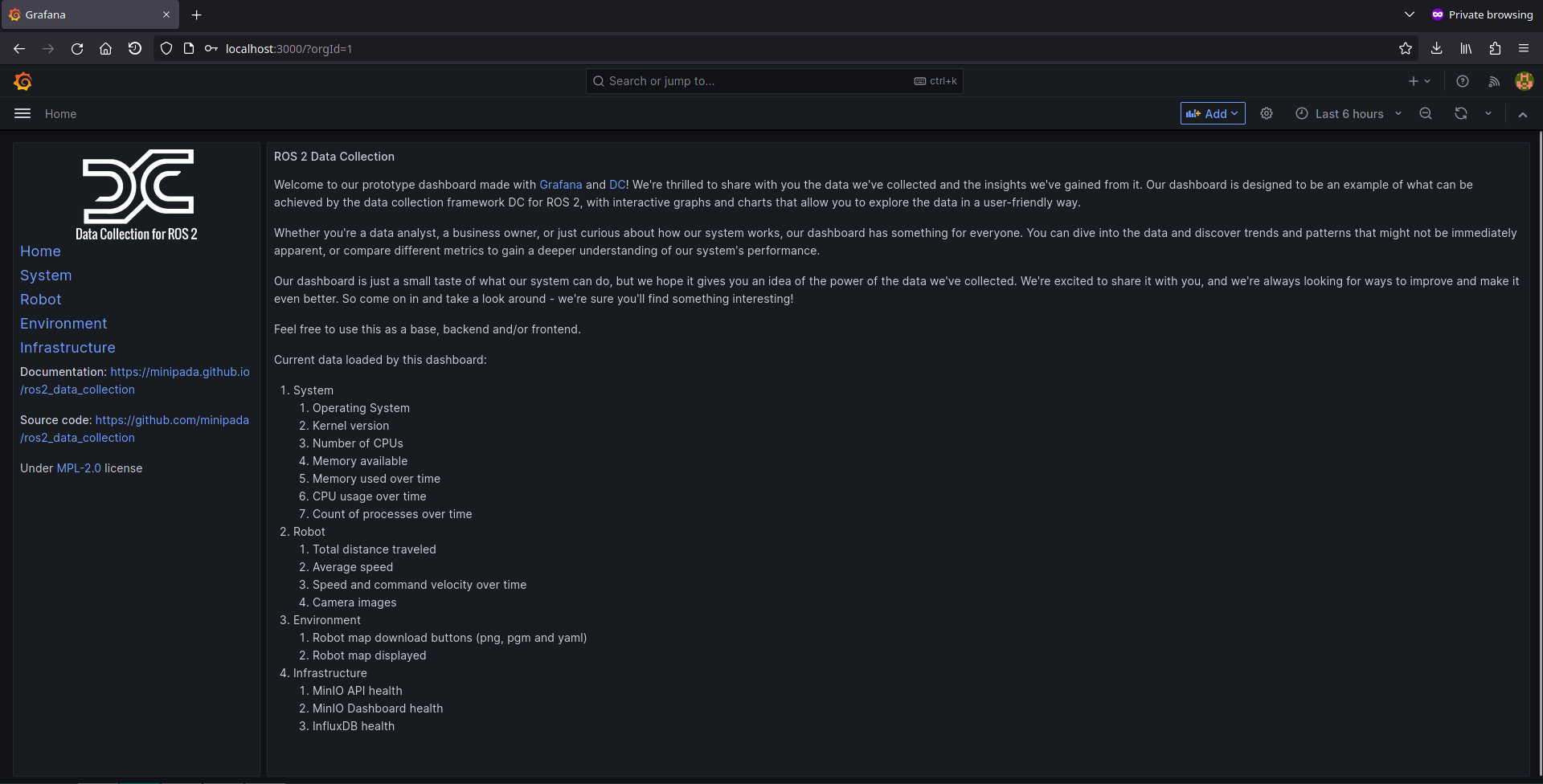

Grafana

Grafana will be used to display the data as timeseries or statistics.

Setup simulation environment

In terminal 1, source your environment and set up the TurtleBot configuration:

source /opt/ros/humble/setup.bash

source install/setup.bash

export GAZEBO_MODEL_PATH=$GAZEBO_MODEL_PATH:/opt/ros/humble/share/turtlebot3_gazebo/models

export GAZEBO_RESOURCE_PATH=${PWD}/src/aws-robomaker-small-warehouse-world/

export TURTLEBOT3_MODEL=waffle

source /usr/share/gazebo/setup.bash

Verify that the Gazebo world loads properly:

gazebo /opt/ros/humble/share/aws_robomaker_small_warehouse_world/worlds/no_roof_small_warehouse/no_roof_small_warehouse.world

Gazebo will start with the warehouse environment. You can close it now.

I believe sourcing the Gazebo setup file along with those exports is needed because of this issue

Terminal 1: Start Navigation

Then, in the same terminal (1), start the Turtlebot launchfile:

ros2 launch nav2_bringup tb3_simulation_launch.py \

world:=/opt/ros/humble/share/aws_robomaker_small_warehouse_world/worlds/no_roof_small_warehouse/no_roof_small_warehouse.world \

map:=/opt/ros/humble/share/aws_robomaker_small_warehouse_world/maps/005/map.yaml \

headless:=False \

x_pose:=3.45 \

y_pose:=2.15 \

yaw:=3.14

RViz and Gazebo will start: now you see the robot in Gazebo, and the map on RViz.

Terminal 2: Start DC

Run colcon build to compile the workspace:

colcon build

Now, start the demo:

ros2 launch dc_demos tb3_simulation_influxdb.launch.py

The robot will start collecting data.

Terminal 3: Start autonomous navigation

Execute

ros2 run nav2_simple_commander demo_security

The robot will start moving and you will be able to see all visualizations activated in RViz:

Visualize the data

With Grafana Dashboard